Streamlining supercomputers

A Los Alamos–developed technology makes supercomputing faster and more efficient.

- Jake Bartman, Communications specialist

For decades in supercomputing, bigger has meant better: A larger machine can produce more complex simulations, yielding more data for researchers. Today, supercomputers such as Los Alamos National Laboratory’s Venado have grown so powerful that a single simulation can produce petabytes of data (a petabyte is a million gigabytes, equivalent to the data that would be produced by more than three years of 24/7 high-definition video recording).

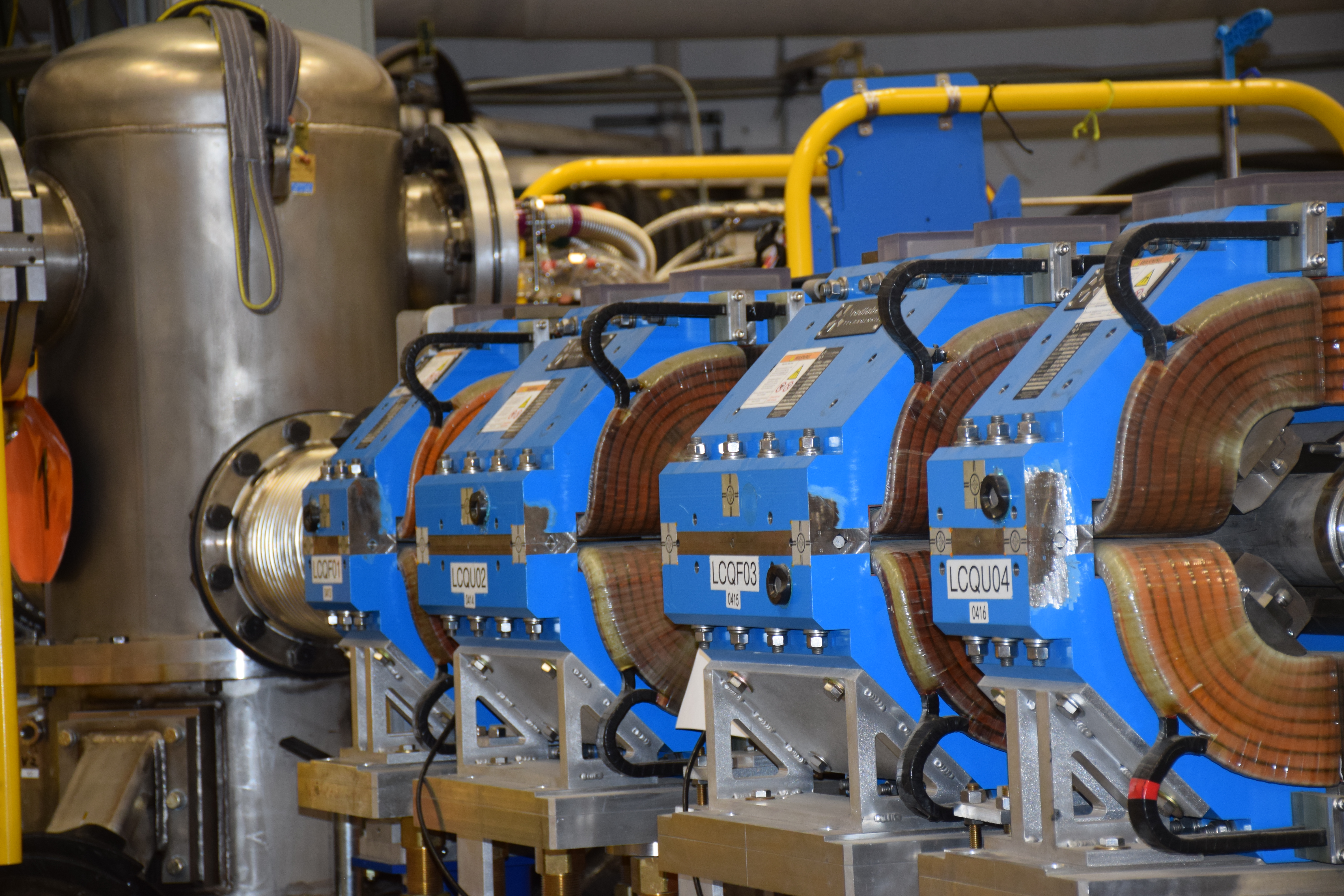

Increasingly, this volume of data is creating bottlenecks for researchers. One bottleneck has to do with the architecture of the supercomputers themselves. Supercomputers such as Venado typically consist of two primary components: a storage platform and a compute platform. The storage platform is responsible for storing the data produced by a simulation, and the compute platform is responsible for analyzing the data. The platforms are connected by a high-speed network that allows for the movement of data between the two.

This architecture makes machines like Venado more flexible. However, there are drawbacks. To analyze the data that’s kept on a machine’s storage platform, the entire dataset must first be transferred to the compute platform.

Transferring a multi-petabyte dataset between platforms can be extraordinarily time-consuming, says Qing Zheng, a researcher at Los Alamos. In extreme cases, as much as 88 percent of the total time required to analyze a simulation can be consumed by moving the data. The data-transfer process also consumes computational resources that could be used in other ways (to conduct other simulations, for example).
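To put the 88 percent figure in perspective, here is a back-of-the-envelope sketch in Python. It assumes, purely for illustration, that transfer time shrinks in proportion to the amount of data moved and that the rest of the analysis takes the same time as before. The function name `max_speedup` and its parameters are hypothetical and do not come from the researchers.

```python
def max_speedup(transfer_fraction: float, movement_reduction: float = 1.0) -> float:
    """Amdahl-style estimate of how much faster an analysis could finish.

    transfer_fraction:  share of total analysis time spent moving data (0 to 1).
    movement_reduction: share of that data movement that is avoided (0 to 1).

    Assumption (not from the article): transfer time scales linearly with
    the volume of data moved, and all other work is unchanged.
    """
    if not 0.0 <= transfer_fraction <= 1.0:
        raise ValueError("transfer_fraction must be between 0 and 1")
    if not 0.0 <= movement_reduction <= 1.0:
        raise ValueError("movement_reduction must be between 0 and 1")
    # Time left over: the untouched compute share plus whatever transfer remains.
    remaining = (1.0 - transfer_fraction) + transfer_fraction * (1.0 - movement_reduction)
    if remaining == 0.0:
        raise ValueError("nothing left to time; speedup is unbounded")
    return 1.0 / remaining


if __name__ == "__main__":
    # Extreme case from the article: 88 percent of the time is data movement.
    print(f"Upper bound if all movement vanished: {max_speedup(0.88):.1f}x")
```

Under these assumptions, an analysis that spends 88 percent of its time moving data could finish at most about 8.3 times faster if the movement were eliminated entirely. Real workloads will differ, since avoiding data movement usually adds some work on the storage side.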

“Faster storage devices, faster interconnects, and more efficient systems software have greatly improved the capabilities of today’s supercomputers in handling massive datasets,” Zheng says. “But even with these advancements, the growth of data is still outstripping the machines’ capabilities. That is leading to ever-increasing times to transfer datasets.”

Zheng analogizes data movement inside a supercomputer to conducting research at a library. “Today’s analysis pipeline is like visiting a library for a research project, but instead of accessing just what you need, you first have to check out every book in the library and haul those books to your office,” Zheng says. “In addition to being slow and wasteful, this approach also reduces the amount of time that other researchers will have on the supercomputer, preventing them from completing their work.”

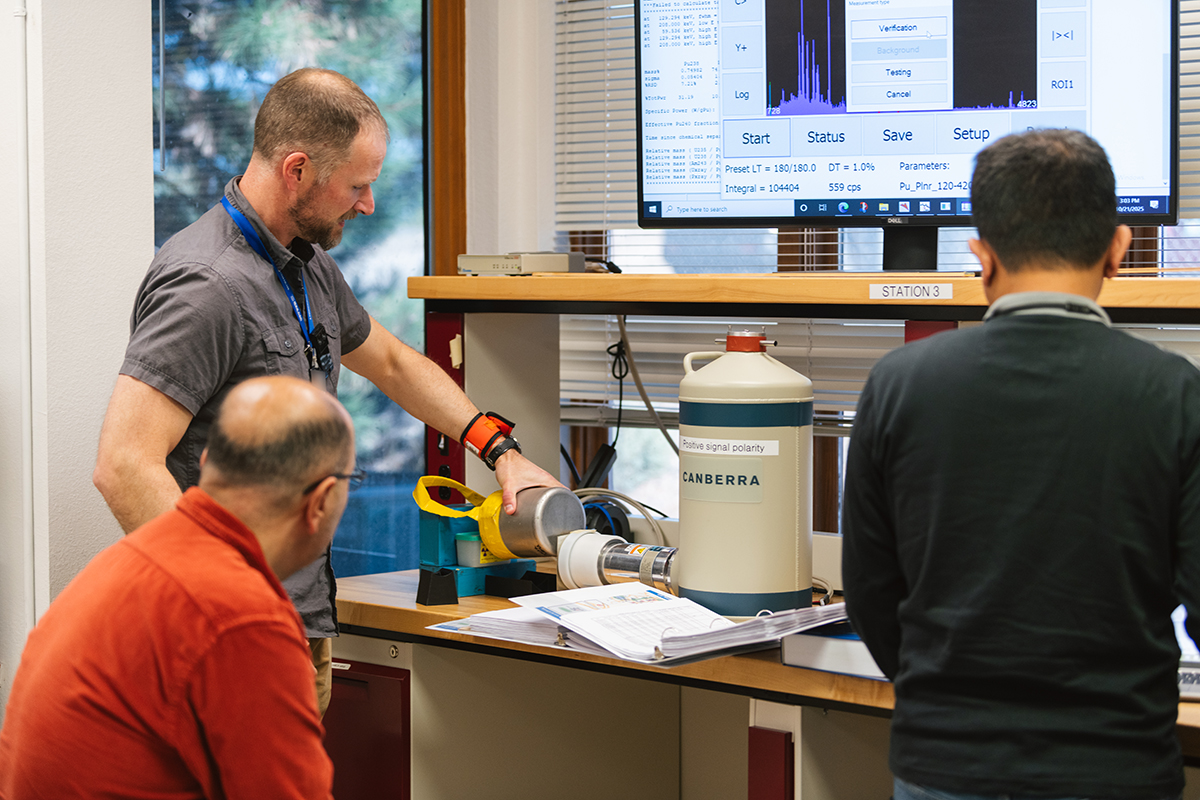

In collaboration with South Korean computational storage manufacturer SK Hynix, Zheng and a team of Laboratory researchers have developed a technology called Object-Based Computational Storage System (OCSS). This technology, which comprises both software and hardware, could substantially speed up data analysis by transferring only the relevant parts of a simulation dataset from the storage platform to the compute platform. In one simulation (of an asteroid crashing into the ocean), researchers using OCSS were able to reduce data movement by 99.9 percent and speed up the creation of a video of the simulated impact by 300 percent.
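The general idea of filtering data where it is stored, rather than after it has been moved, can be illustrated with a toy Python sketch. This is not OCSS or its interface. The class `ToyStorageNode`, the record layout, the 16-byte record size, and the selection rule are all made up for illustration, and the byte counts are a product of those choices rather than measurements.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple

Record = Tuple[int, int]  # (cell_id, value): a made-up record layout
RECORD_BYTES = 16         # assumed size of one record on the network


@dataclass
class ToyStorageNode:
    """Hypothetical storage node that counts the bytes it sends out."""
    records: List[Record]
    bytes_sent: int = 0

    def read_all(self) -> List[Record]:
        # Conventional path: ship every record to the compute platform.
        self.bytes_sent += len(self.records) * RECORD_BYTES
        return list(self.records)

    def read_where(self, predicate: Callable[[Record], bool]) -> List[Record]:
        # Computational-storage path: filter next to the data,
        # then ship only the records that match.
        matches = [r for r in self.records if predicate(r)]
        self.bytes_sent += len(matches) * RECORD_BYTES
        return matches


def is_relevant(record: Record) -> bool:
    # Stand-in for "the relevant part of the dataset": 1 record in 100.
    return record[1] == 99


def compare(n: int = 10_000) -> Tuple[int, int, bool]:
    """Return (bytes moved conventionally, bytes moved with filtering, same answer?)."""
    data = [(i, i % 100) for i in range(n)]

    conventional = ToyStorageNode(data)
    # Compute platform receives everything, then filters locally.
    result_a = [r for r in conventional.read_all() if is_relevant(r)]

    pushdown = ToyStorageNode(data)
    # Storage platform filters first; compute platform receives only matches.
    result_b = pushdown.read_where(is_relevant)

    return conventional.bytes_sent, pushdown.bytes_sent, result_a == result_b


if __name__ == "__main__":
    full, filtered, same = compare()
    print(f"conventional: {full} bytes, filtered at storage: {filtered} bytes, same result: {same}")
```

Both paths produce the same set of records. The only difference is how many bytes cross the network, which in this toy setup is fixed by how selective the filter is.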

Beyond supporting national security–related research at Los Alamos, OCSS could benefit industry by accelerating simulations of seismic phenomena, fossil fuel reservoirs, and more. OCSS is designed to follow open standards, which will allow the technology to adapt and integrate with emerging tools such as artificial intelligence.

“As technologies evolve, we want scientists and researchers to be able to stay focused on their discoveries,” Zheng says. “By following open standards and allowing different technologies to communicate, researchers can be confident that their workflows will remain flexible, efficient, and able to grow alongside the next breakthroughs in big data analytics and storage.” ★