Earth & Environmental Sciences

Los Alamos National Laboratory

Los Alamos, NM 87545, USA

Tel: +1 (505) 665-1458

Cell: +1 (505) 412-7159

Email: vvv@lanl.gov

Email: velimir.vesselinov@gmail.com

Web: monty.gitlab.io

My expertise is in applied mathematics, computer science, environmental management and engineering. My research interests are in the general areas of machine learning, data analytics, model diagnostics, and cutting-edge computing (including high-performance cloud, quantum, edge). I am the inventor and lead developer of a series of novel theoretical methods and computational tools for machine learning (ML) and artificial intelligence (AI). I am also a co-inventor of a series of patented ML/AI methodologies. Over the years, I have been the principal investigator of numerous projects. These projects addressed various Earth-sciences problems, including geothermal, carbon sequestration/storage, oil/gas production, climate/anthropogenic impacts, wildfires, environmental management, water supply/contamination, watershed hydrology, induced seismicity, and waste disposal. Work under these projects included various tasks such as machine learning, data analytics, statistical analyses, model development, model analyses, uncertainty quantification, sensitivity analyses, risk assessment, and decision support.

My Ph.D. (University of Arizona, 2000) is in Hydrology and Water Resource Engineering with a minor in Applied Mathematics (adviser Regents Professor Shlomo P. Neuman). I joined LANL as a postdoc in 2000 and have been a staff member since 2001.

At LANL, I have been involved in numerous projects related to computational earth sciences, big-data analytics, modeling, model diagnostics, high-performance computing, quantum computing, and machine learning. I have authored book chapters and more than 130 research papers cited more than 2,100 times with h-index 24 (Google Scholar).

For my research work, I received a series of awards. In 2019, I was inducted into the Los Alamos National Laboratory’s Innovation Society.

I am also the lead developer of a series of groundbreaking open-source codes for machine learning, data analytics, and model diagnostics. The codes are actively used worldwide by the community. They are available on GitHub and GitLab.

SmartTensors is a general framework for Unsupervised and Physics-Informed Machine Learning and Artificial Intelligence (ML/AI). In 2021, SmartTensors was nominated for an R&D100 award.

MADS is an integrated open-source high-performance computational framework for data analytics and model diagnostics. MADS has been integrated in SmartTensors to perform model calibration (history matching), uncertainty quantification, sensitivity analyses, risk assessment and decision analysis based on the SmartTensors ML/AI predictions.

Ph.D., 2000

Department of Hydrology and Water Resources, University of Arizona, Tucson, Arizona, USA

Major: Engineering Hydrology

Minor: Applied Mathematics

Dissertation title: Numerical inverse interpretation of pneumatic tests in unsaturated fractured tuffs at the Apache Leap Research Site

Advisor: Regents Professor Dr. Shlomo P. Neuman

M.Eng., 1989

Department of Hydrogeology and Engineering Geology, Institute of Mining and Geology, Sofia, Bulgaria

Major: Hydrogeology

Minor: Engineering Geology

Dissertation title: Hydrogeological investigation in applying the Vyredox method for groundwater decontamination

Advisor: Professor Dr. Pavel P. Pentchev

More publications are available at Google Scholar, ResearchGate, and Academia.edu.

More presentations are available at SlideShare.net, ResearchGate, and Academia.edu.

More videos are available on my ML YouTube channel.

More reports are available at the LANL electronic public reading room.

Over the years, I have been the principal investigator or principal co-investigator of a series of multi-institutional/multi-million/multi-year projects funded by LANL, LDRD, DOE, ARPA-E, and industry partners:

In addition to my scientific pursuits, I have always been interested in the arts.

My artwork includes drawings, photography, and acrylic paintings.

Unsupervised Machine Learning (ML) methods are powerful data-analytics tools capable of extracting important features hidden (latent) in large datasets without any prior information. The physical interpretation of the extracted features is done a posteriori by subject-matter experts.

In contrast, supervised ML methods are trained on large labeled datasets. The labeling is performed a priori by subject-matter experts. The process of deep ML commonly includes both unsupervised and supervised techniques (LeCun, Bengio, and Hinton, 2015), where unsupervised ML methods are applied to facilitate the process of data labeling.

The integration of large datasets, powerful computational capabilities, and affordable data storage has resulted in the widespread use of ML/AI in science, technology, and industry.

Recently, we have developed novel unsupervised and physics-informed ML methods. The methods utilize Matrix/Tensor Decomposition (Factorization) coupled with physics, sparsity, and nonnegativity constraints. The methods are capable of revealing the temporal and spatial footprints of the extracted features.

SmartTensors is a general framework for Unsupervised and Physics-Informed Machine Learning and Artificial Intelligence (ML/AI).

SmartTensors incorporates a novel unsupervised ML based on tensor decomposition coupled with physics, sparsity and nonnegativity constraints.

SmartTensors has been applied to extract the temporal and spatial footprints of the features in multi-dimensional datasets in the form of multi-way arrays or tensors.

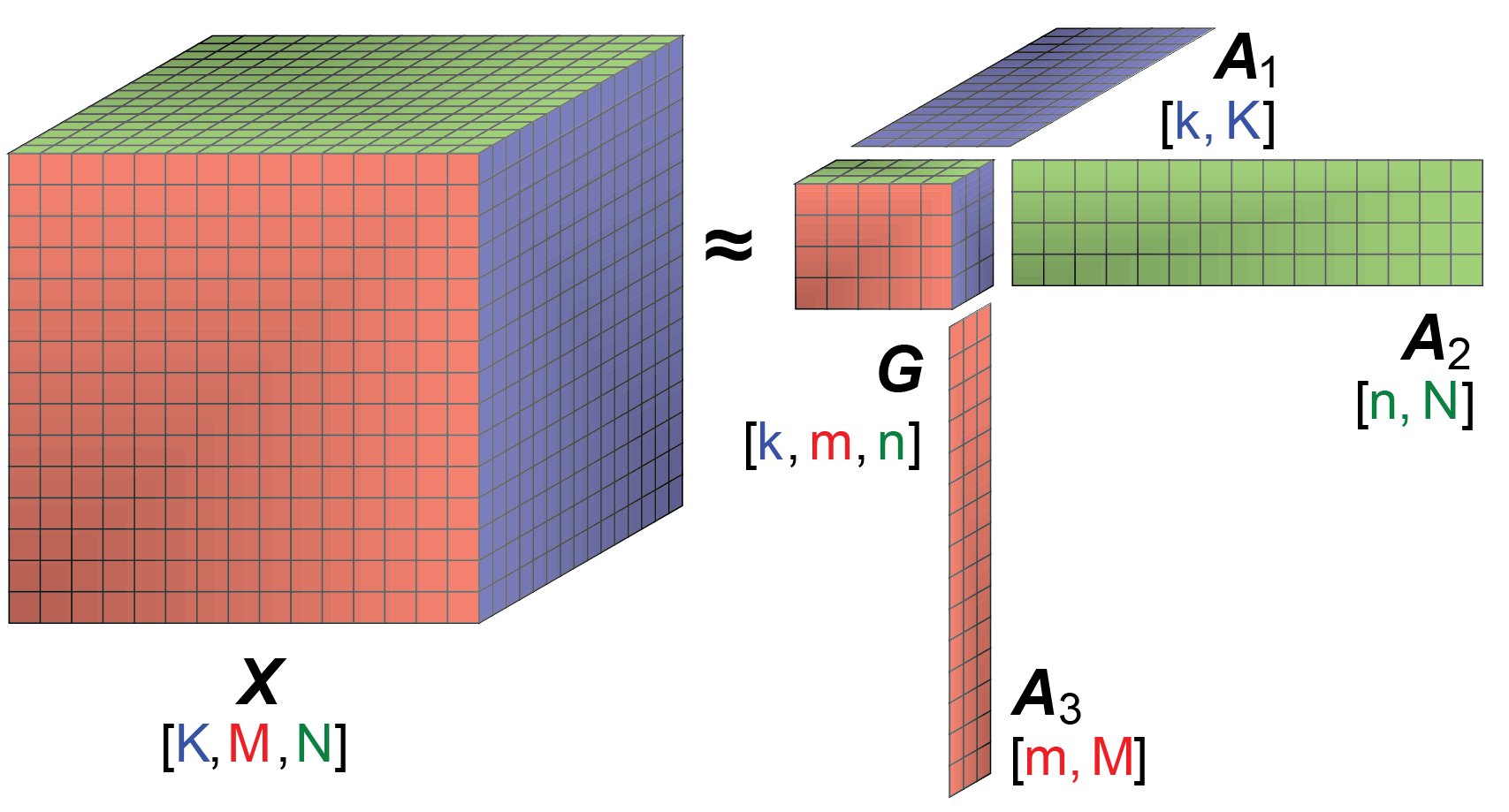

The decomposition (factorization) of a given tensor \(X\) is typically performed by minimization of the Frobenius norm:

$$ \frac{1}{2} ||X-G \otimes_1 A_1 \otimes_2 A_2 \dots \otimes_n A_n ||_F^2 $$

where:

The product \(G \otimes_1 A_1 \otimes_2 A_2 \dots \otimes_n A_n\) is an estimate of \(X\) (\(X_{est}\)).

The reconstruction error \(X - X_{est}\) is expected to be random uncorrelated noise.

\(G\) is an \(n\)-dimensional tensor with a size and a rank lower than the size and the rank of \(X\). The size of tensor \(G\) defines the number of extracted features (signals) in each of the tensor dimensions.

The factor matrices \(A_1,A_2,\dots,A_n\) represent the extracted features (signals) in each of the tensor dimensions. The number of matrix columns equals the number of features in the respective tensor dimension (if there is only 1 column, the particular factor is a vector). The number of matrix rows in each factor (matrix) \(A_i\) equals the size of tensor \(X\) in the respective dimension.

The elements of tensor \(G\) define how the features along each dimension (\(A_1,A_2,\dots,A_n\)) are mixed to represent the original tensor \(X\).
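The roles of \(G\) and the factor matrices can be illustrated with a minimal sketch for a three-way tensor. The code below is not taken from SmartTensors; the function names `tucker_reconstruct` and `objective` and the toy numbers are assumptions made for illustration. It builds \(X_{est}\) from a core and three factors, then evaluates the objective \(\frac{1}{2}||X - X_{est}||_F^2\).

```python
def tucker_reconstruct(G, A1, A2, A3):
    """X_est[i][j][k] = sum over p,q,r of G[p][q][r] * A1[i][p] * A2[j][q] * A3[k][r]."""
    I, J, K = len(A1), len(A2), len(A3)
    P, Q, R = len(G), len(G[0]), len(G[0][0])
    return [[[sum(G[p][q][r] * A1[i][p] * A2[j][q] * A3[k][r]
                  for p in range(P) for q in range(Q) for r in range(R))
              for k in range(K)] for j in range(J)] for i in range(I)]


def objective(X, X_est):
    """Half of the squared Frobenius norm of the reconstruction error X - X_est."""
    return 0.5 * sum((x - e) ** 2
                     for Xi, Ei in zip(X, X_est)
                     for Xj, Ej in zip(Xi, Ei)
                     for x, e in zip(Xj, Ej))


# Hypothetical toy problem: a 2 x 1 x 1 core and a 3 x 2 x 2 tensor.
G = [[[1.0]], [[2.0]]]                      # mixing of the features
A1 = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]   # 3 rows (size of X in dimension 1), 2 features
A2 = [[1.0], [2.0]]                         # 2 rows, 1 feature (a vector)
A3 = [[1.0], [3.0]]                         # 2 rows, 1 feature (a vector)

X = tucker_reconstruct(G, A1, A2, A3)

# Perturb one entry to mimic noise and evaluate the objective.
X_noisy = [[row[:] for row in plane] for plane in X]
X_noisy[0][0][0] += 0.5
error = objective(X_noisy, X)
```

In this toy case each factor matrix has as many rows as the matching dimension of \(X\) and as many columns as the matching dimension of \(G\), which is the sizing rule described above.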

The tensor decomposition is commonly performed using Candecomp/Parafac (CP) or Tucker decomposition models.
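For reference, the CP model can be viewed as a Tucker model whose core \(G\) is superdiagonal, so the estimate becomes a sum of rank-one terms. In the sketch below, the symbols \(k\), \(\lambda_r\), and \(a_r^{(i)}\) are introduced here for illustration and do not appear elsewhere on this page:

$$ X_{est} = \sum_{r=1}^{k} \lambda_r \, a_r^{(1)} \circ a_r^{(2)} \circ \dots \circ a_r^{(n)} $$

Here \(a_r^{(i)}\) is the \(r\)-th column of \(A_i\), \(\circ\) denotes the outer product, \(\lambda_r\) is the \(r\)-th diagonal entry of \(G\), and \(k\) is the number of features, which in the CP model is the same in every dimension.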

Some of the decomposition models can theoretically lead to unique solutions under specific, albeit rarely satisfied, noiseless conditions. When these conditions are not satisfied, additional minimization constraints can assist the factorization.

A popular approach is to add sparsity and nonnegativity constraints. Sparsity constraints on the elements of \(G\) reduce the number of features and their mixing (by having as many zero entries as possible). Nonnegativity enforces a parts-based representation of the original data, which also allows the tensor decomposition results for \(G\) and \(A_1,A_2,\dots,A_n\) to be easily interrelated (Cichocki et al., 2009).
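To make the effect of the nonnegativity constraint concrete, here is a minimal sketch for the two-way (matrix) case. It uses the classical multiplicative updates of Lee and Seung, which keep every factor entry nonnegative. This is a swapped-in textbook technique, not the SmartTensors implementation, and no sparsity penalty is included. The names `nmf`, `W`, `H`, and the toy matrices are assumptions made for illustration.

```python
import random


def matmul(A, B):
    """Plain-Python matrix product."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


def transpose(A):
    return [list(col) for col in zip(*A)]


def half_frobenius(X, W, H):
    """0.5 * ||X - W H||_F^2"""
    WH = matmul(W, H)
    return 0.5 * sum((x - y) ** 2 for rx, ry in zip(X, WH) for x, y in zip(rx, ry))


def nmf(X, k, iters=200, seed=0, eps=1e-12):
    """Factor a nonnegative matrix X (m x n) into W (m x k) and H (k x n)."""
    rng = random.Random(seed)
    m, n = len(X), len(X[0])
    W = [[rng.random() + 0.1 for _ in range(k)] for _ in range(m)]
    H = [[rng.random() + 0.1 for _ in range(n)] for _ in range(k)]
    history = [half_frobenius(X, W, H)]
    for _ in range(iters):
        # H <- H * (W^T X) / (W^T W H), element-wise
        Wt = transpose(W)
        num, den = matmul(Wt, X), matmul(matmul(Wt, W), H)
        H = [[h * a / (b + eps) for h, a, b in zip(rh, ra, rb)]
             for rh, ra, rb in zip(H, num, den)]
        # W <- W * (X H^T) / (W H H^T), element-wise
        Ht = transpose(H)
        num, den = matmul(X, Ht), matmul(W, matmul(H, Ht))
        W = [[w * a / (b + eps) for w, a, b in zip(rw, ra, rb)]
             for rw, ra, rb in zip(W, num, den)]
        history.append(half_frobenius(X, W, H))
    return W, H, history


# Hypothetical toy data: a 4 x 3 matrix that is an exact product of nonnegative factors.
W_true = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [2.0, 1.0]]
H_true = [[1.0, 2.0, 0.0], [0.0, 1.0, 3.0]]
X = matmul(W_true, H_true)

W, H, history = nmf(X, k=2)
```

Because each update multiplies the current entry by a ratio of nonnegative quantities, the factors never become negative, and the objective recorded in `history` does not increase from one iteration to the next.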

The SmartTensors algorithms NMFk and NTFk, which perform Matrix/Tensor Factorization (Decomposition) coupled with sparsity and nonnegativity constraints and custom k-means clustering, have been developed in Julia.

SmartTensors codes are available as open source on GitHub.

Other key methods/tools for ML include:

Presentations are also available at SlideShare.net.

Data analytics work executed under most of the projects and practical applications has been performed using the wide range of novel theoretical methods and computational tools developed over the years.

Key tools for data analytics include:

Model diagnostics work executed under most of the projects and practical applications has been performed using the wide range of novel theoretical methods and computational tools developed over the years.

A key tool for model diagnostics is the MADS (Model Analysis & Decision Support) framework. MADS is an integrated open-source high-performance computational (HPC) framework.

MADS can execute a wide range of data- and model-based analyses:

MADS has been tested to perform HPC simulations on a wide range of multi-processor clusters and parallel environments (Moab, Slurm, etc.).

MADS utilizes adaptive rules and techniques that allow the analyses to be performed with minimal user input.

MADS provides a series of alternative algorithms to execute each type of data- and model-based analysis.

MADS can be externally coupled with any existing simulator through integrated modules that generate input files required by the simulator and parse output files generated by the simulator using a set of template and instruction files.
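The general idea of external coupling through template and instruction files can be sketched as follows. This is not the MADS file format or API. The template syntax, the regular expression used as an "instruction", and the stand-in function `fake_simulator` are all hypothetical and only illustrate the write-input, run, parse-output cycle.

```python
import re
from string import Template

# Hypothetical template for a simulator input file; $k and $s mark the parameters.
INPUT_TEMPLATE = Template("conductivity = $k\nstorativity = $s\n")

# Hypothetical "instruction": a pattern that locates the value to read from the output.
OUTPUT_PATTERN = re.compile(r"head\s*=\s*([-+0-9.eE]+)")


def write_input(params):
    """Fill the template with the current parameter values."""
    return INPUT_TEMPLATE.substitute(params)


def fake_simulator(input_text):
    """Stand-in for an external simulator: takes input text, returns output text."""
    values = dict(line.split(" = ") for line in input_text.strip().splitlines())
    head = 10.0 * float(values["conductivity"]) + float(values["storativity"])
    return f"run complete\nhead = {head}\n"


def read_output(output_text):
    """Apply the instruction to extract the simulated observation."""
    match = OUTPUT_PATTERN.search(output_text)
    if match is None:
        raise ValueError("observation not found in simulator output")
    return float(match.group(1))


def forward_model(params):
    """One model execution: write input, run the simulator, parse output."""
    return read_output(fake_simulator(write_input(params)))


result = forward_model({"k": 2.0, "s": 0.5})
```

Wrapping the simulator in a single `forward_model` function means that any analysis needing repeated model runs (calibration, sensitivity analysis, uncertainty quantification) only has to supply parameter values and read back the result.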

MADS also provides internal coupling with a series of built-in analytical simulators of groundwater flow and contaminant transport in aquifers.

MADS has been successfully applied to perform various model analyses related to environmental management of contamination sites. Examples include solutions of source identification problems, quantification of uncertainty, model calibration, and optimization of monitoring networks.

The current stable version of MADS is actively updated.

Professional software packages and codes with somewhat similar but not equivalent capabilities are:

MADS source code and example input/output files are available at the MADS website.

MADS documentation is available at GitHub and GitLab.

The C version of the MADS code is also available: MADS C website and MADS C source. A Python interface for MADS is under development: Python

Other key tools for model diagnostics include:

SmartTensors is a general framework for Unsupervised and Physics-Informed Machine Learning (ML) using Nonnegative Matrix/Tensor decomposition algorithms.

NMFk/NTFk (Nonnegative Matrix Factorization/Nonnegative Tensor Factorization) are two of the codes within the SmartTensors framework.

Unsupervised ML methods can be applied for feature extraction, blind source separation, model diagnostics, detection of disruptions and anomalies, image recognition, discovery of unknown dependencies and phenomena represented in datasets, as well as development of physics and reduced-order models representing the data. A series of novel unsupervised ML methods based on matrix and tensor factorizations, called NMFk and NTFk, have been developed, allowing for objective, unbiased data analyses to extract essential features hidden in data. The methodology is capable of identifying the unknown number of features characterizing the analyzed datasets, as well as the spatial footprints and temporal signatures of the features in the explored domain.

SmartTensors algorithms are written in Julia.

SmartTensors codes are available as open source on GitHub.

SmartTensors can utilize various external computing platforms, including Flux.jl, TensorFlow, PyTorch, MXNet, and MATLAB.

SmartTensors is currently funded by DOE for commercial deployment (with JuliaComputing) through the Technology Commercialization Fund (TCF).

MADS (Model Analysis & Decision Support) is an integrated open-source high-performance computational (HPC) framework.

MADS can execute a wide range of data- and model-based analyses:

MADS has been tested to perform HPC simulations on a wide range of multi-processor clusters and cloud parallel environments (Moab, Slurm, etc.).

MADS utilizes adaptive rules and techniques that allow the analyses to be performed with minimal user input.

MADS provides a series of alternative algorithms to execute each type of data- and model-based analysis.

MADS can be externally coupled with any existing simulator through integrated modules that generate input files required by the simulator and parse output files generated by the simulator using a set of template and instruction files.

MADS also provides internal coupling with a series of built-in analytical simulators of groundwater flow and contaminant transport in aquifers.

MADS has been successfully applied to perform various model analyses related to environmental management of contamination sites. Examples include solutions of source identification problems, quantification of uncertainty, model calibration, and optimization of monitoring networks.

The current stable version of MADS is actively updated.

Codes with somewhat similar but not equivalent capabilities are:

MADS source code and example input/output files are available at the MADS website.

MADS documentation is available at GitHub and GitLab.

MADS old sites: LANL, LANL C, LANL Julia, LANL Python

WELLS is a code that simulates drawdowns caused by multiple pumping/injecting wells using analytical solutions. WELLS has C and Julia versions.

WELLS can represent pumping in confined, unconfined, and leaky aquifers.

WELLS applies the principle of superposition to account for transients in the pumping regime and multiple sources (pumping wells).
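The principle of superposition can be sketched with the classical Theis (1935) solution for a confined aquifer. Using Theis here is an assumption for illustration. The code below is not the WELLS implementation, and the function names and parameter values are hypothetical. Each change in pumping rate is treated as a new well that starts at the time of the change, and the contributions of all wells are summed.

```python
import math

EULER_GAMMA = 0.5772156649015329


def well_function(u, terms=100):
    """Theis well function W(u) (the exponential integral E1) from its power series."""
    if u > 20.0:
        return 0.0  # W(u) is negligibly small here, and the series loses accuracy
    total = -EULER_GAMMA - math.log(u)
    power = 1.0  # holds (-u)^n / n!
    for n in range(1, terms + 1):
        power *= -u / n
        total -= power / n
    return total


def drawdown(t, wells, T, S):
    """Drawdown at time t at the observation point.

    wells: list of (r, schedule), where r is the distance to the pumping well and
           schedule is a time-ordered list of (start_time, pumping_rate) steps.
    T, S:  transmissivity and storativity, in units consistent with r, t and the rates.
    """
    s = 0.0
    for r, schedule in wells:
        previous_rate = 0.0
        for start, rate in schedule:
            if t > start:
                u = r * r * S / (4.0 * T * (t - start))
                # Superpose the effect of the rate change (rate - previous_rate).
                s += (rate - previous_rate) / (4.0 * math.pi * T) * well_function(u)
            previous_rate = rate
    return s


# Hypothetical example (units: m and days): one well 100 m away pumping 500 m^3/d,
# switched on at t = 0 and off at t = 1 day.
pumping_only = drawdown(2.0, [(100.0, [(0.0, 500.0)])], T=250.0, S=1e-4)
with_recovery = drawdown(2.0, [(100.0, [(0.0, 500.0), (1.0, 0.0)])], T=250.0, S=1e-4)
```

Switching the pump off is represented as a negative rate change, so `with_recovery` is smaller than `pumping_only` but still positive while the aquifer recovers.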

WELLS can apply a temporal trend of water-level change to account for non-pumping influences (e.g. recharge trend).

WELLS can account for early-time behavior by using exponential functions (transmissivities and storativities; Harp and Vesselinov, 2013).

WELLS analytical solutions include:

WELLS has been applied to decompose transient water-supply pumping influences in observed water levels at the LANL site (Harp and Vesselinov, 2010a).

For example, the figure below shows WELLS simulated drawdowns caused by pumping of PM-2, PM-3, PM-4 and PM-5 on water levels observed at R-15.

The model inversion of the WELLS model predictions is achieved using the code MADS.

Codes with similar capabilities are AquiferTest, AquiferWin32, Aqtesolv, MLU, and WTAQ.

WELLS source code, example input/output files, and a manual are available at the WELLS websites: LANL GitLab Julia GitHub

LA-CC-10-019, LA-CC-11-098

MPEST is a parallel version of the code PEST (Doherty 2009).

MPEST was developed to solve optimization problems efficiently in parallel using distributed computing.

MPEST has been applied in many parallel computing projects worldwide.

MPEST parallel subroutines have been imported and further developed in the code MADS.

The source code, example input/output files, and a manual are available at the MADS website.

Project PI: Velimir V Vesselinov

ML4Geo is a project funded by the U.S. Department of Energy ARPA-E.

ML4Geo includes collaborators from MIT, Stanford, University of Texas-Austin, JuliaComputing, Descartes Lab, and Chevron.

Project PI: Velimir V Vesselinov

GeoThermalCloud is a project funded by the Geothermal Office of the U.S. Department of Energy EERE (Energy Efficiency and Renewable Energy).

GeoThermalCloud includes collaborators from Google, Descartes Lab, Stanford, and University of Texas-Austin.

The DOE Carbon Storage Assurance Facility Enterprise (CarbonSAFE) initiative focuses on the development of geologic storage sites for the storage of 50+ million metric tons (MMT) of carbon dioxide (CO2) from existing industrial sources. There are several ongoing CarbonSAFE projects. They aim to improve understanding of site screening, site selection, characterization, baseline monitoring, verification, accounting (MVA), and assessment procedures related to safe carbon storage and sequestration. They also aim to produce the information necessary to develop appropriate permits and to design injection and monitoring strategies for commercial-scale projects. The CarbonSAFE efforts will contribute to the development of 50+ MMT storage sites.

I am the Machine Learning PI of the CarbonSAFE project led by University of Wyoming.

LANL PI: Velimir V Vesselinov

DiaMonD is a project funded by the U.S. Department of Energy Office of Science.

DiaMonD addresses Mathematics at the Interfaces of Data, Models, and Decisions.

DiaMonD involves researchers from Colorado State University, Florida State University, Los Alamos National Laboratory, Massachusetts Institute of Technology, Oak Ridge National Laboratory, University of Texas at Austin, and Stanford University.

More information about the DiaMonD work, collaborators, and publications can be found on the project website.

Decision Support PI: Velimir V Vesselinov

A consortium of multiple national laboratories has developed high-performance computing capabilities to meet the challenge of waste disposal and cleanup left over from the creation of the US nuclear stockpile decades ago. The project is funded by the Department of Energy Office for Environmental Management (DOE-EM).

Within ASCEM, the goal of the "Decision Support" task is to create a computational framework that facilitates decision making by site-application users, modelers, stakeholders, and decision/policy makers. The decision-support framework leverages existing and novel theoretical methods and computational techniques to meet the general decision-making needs of DOE-EM as well as the particular site-specific needs of individual environmental management sites.

The decision-support framework can be applied to identify what kind of model analyses should be performed to mitigate the risk at a given environmental management site, and, if needed, support the design of data-acquisition campaigns, field experiments, monitoring networks, and remedial systems. Depending on the problem, the decision-support framework utilizes various types of model analyses such as parameter estimation, sensitivity analysis, uncertainty quantification, risk assessment, experimental design, cost estimation, data-worth (value of information) analysis, etc.

Los Alamos National Laboratory (LANL) is a complex site for environmental management. The site encompasses about 100 km² (37 square miles) of terrain with 600 m (2,000 feet) of elevation change, and an average rainfall of less than 300-400 mm (12 to 16 inches) per year. The site is intersected by 14 major canyon systems. Ecosystems within the site range from riparian to high desert and boast over 2,000 archaeological sites, as well as endangered species habitats. The surface and subsurface water flow discharges primarily along the Rio Grande to the east of LANL. The Rio Grande traverses the Española basin from north to south; several major municipalities use the river water downgradient from LANL for water supply (Santa Fe, Albuquerque, El Paso/Juarez).

The regional aquifer beneath LANL is a complex hydrogeological system. The regional aquifer extends throughout the Española basin, and is an important source for municipal water supply for Santa Fe, Los Alamos, Española, LANL, and several Native-American Pueblos. The wells providing groundwater from this aquifer for Los Alamos and LANL are located within the LANL site and in close proximity to existing contamination sites. The regional aquifer is comprised of sediments and lavas with heterogeneous flow and transport properties. The general shape of the regional water table is predominantly controlled by the areas of regional recharge to the west (the flanks of the Sierra de los Valles and the Pajarito fault zone) and discharge to the east (the Rio Grande and the White Rock Canyon Springs). At more local scales, the structure of groundwater flow is also influenced by (1) local infiltration zones (e.g., beneath wet canyons); (2) heterogeneity and anisotropy in the aquifer properties; and (3) discharge zones (municipal water-supply wells and springs). The aquifer is also characterized by well-defined, vertical stratification, which, in general, provides sufficient protection of the deep groundwater resources.

The vadose zone, between the ground surface and the top of the regional aquifer, is about 180-300 m (600-1000 ft) thick. The vadose zone is comprised of sediments and lavas with heterogeneous flow and transport properties. The variably-saturated flow and transport through the thick vadose zone occurs through pores and fractures, and is predominantly vertical with lateral deviations along perching zones. The groundwater velocities in the vadose zone are high beneath wet canyons (up to 1 m/a) and low beneath the mesas (1 mm/a). Due to complexities in local hydrogeologic conditions, the hydraulic separation between the regional aquifer and the vadose zone is difficult to identify at some localities, especially where mountain-front recharge is pronounced.

The complexity and size of the LANL site make environmental management a continuing engineering and scientific challenge. Legacy contamination (both chemical and radioactive) exists at many locations. Some of the world's oldest radioactive Material Disposal Areas (MDAs), where waste is buried in pits and shafts, are located on the site. LANL is mandated to follow timetables and requirements specified by the Compliance Order on Consent from the New Mexico Environment Department (NMED) for investigation, monitoring, and remediation of hazardous constituents and contaminated sites.

The environmental work performed at the LANL site is managed by the Environmental Programs (EP) Directorate. A team of external and LANL (Computational Earth Sciences Group, Earth & Environmental Sciences) researchers is tasked by the EP Directorate to provide modeling and decision support to enable scientifically-defensible mitigation of the risks associated with various LANL sites. The principal investigator of this team for more than a decade has been Velimir Vesselinov.

Since the 1950s, the LANL site has been the subject of intensive studies for characterization of the site conditions, including regional geology and hydrogeology. Various types of research related to contaminant transport in the environment have been performed at the site, including (1) laboratory experiments, (2) field tests, and (3) conceptual and numerical model analyses. The work is presented in a series of technical reports and peer-reviewed publications.

Important aspects of the environmental management at the LANL site include:

A chromium plume has been identified in the regional aquifer beneath the LANL site. Our team has been tasked with providing modeling decision support to the Environmental Programs (EP) Directorate to enable scientifically-defensible mitigation of the risks associated with chromium migration in the environment. A large amount of data and information are available related to the chromium site (vadose-zone moisture content, aquifer water levels, contaminant concentrations, geologic observations, drilling logs, etc.); they are used to develop and refine conceptual and numerical models of the contaminant transport in the environment. The development of numerical models and performance of model analyses (model calibration, sensitivity analyses, parameter estimations, uncertainty quantification, source identification, data-worth analyses, monitoring-network design, etc.) is a computationally intensive effort due to large model domains, large numbers of computational nodes, complex flow media (porous and fracture flow), and long model-execution times. Due to complexities in the model-parameter space, most of the model analyses require a substantial number of model executions. To improve computational effectiveness, our team utilizes state-of-the-art parallel computational resources and novel theoretical and computational methods for model calibration, uncertainty analysis, risk assessment, and decision support.

Numerical modeling of flow and transport in the regional aquifer near the Sandia Canyon

The numerical model captures the current conceptual understanding and is calibrated against existing data (taking into account uncertainties).

Regardless of existing uncertainties, the model provides information related to: