Mapping knowledge for machines

Ontology development at Los Alamos supports weapons research, artificial intelligence, and more.

- Jake Bartman, Communications specialist

“When you see a pizza, how do you know it’s a pizza?” asks Craig Blackhart, an engineer at Los Alamos National Laboratory. “You might say, ‘Well, a pizza has sauce, cheese, and crust’—but a calzone has those characteristics, too. So, we have to be more specific.”

Blackhart’s point might seem abstract or philosophical (or reflect the fact that this conversation took place around lunchtime). And yet, the question of how exactly one thing differs from another is central to Blackhart’s work, which involves developing digital schemata called ontologies.

Ontologies are a way of organizing human knowledge in a manner that machines can understand. By developing ontologies that accurately capture key concepts in specific areas of knowledge, it is possible to develop intelligent search engines and other tools that can support national security, augment artificial intelligence (AI) systems, and more.

Depicted visually, an ontology can be laid out like a spider’s web, with nodes representing distinct concepts. For an ontology of pizza, these concepts could include “veggie pizza,” “meat pizza,” “margherita pizza,” and others, each connected to related concepts (such as the toppings “mushroom” and “bell pepper”) by logical relationships (“hasTopping,” “hasTopping[some],” and so on). This makes an ontology much more flexible than a schema such as a hierarchy, which organizes concepts from the top down and can miss the nuances of how concepts relate to one another (for example, the characteristics that allow humans to determine whether a particular food item is a pizza or a calzone).
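To make the web-of-concepts idea concrete, here is a minimal Python sketch. It assumes a toy in-memory representation, not the OWL files that an ontology editor such as Protégé produces. The `Ontology` helper, the concept names, and the `hasShape` relationship are hypothetical and chosen only for illustration. The sketch shows how a subcategory inherits relationships from its parent and how one distinguishing relationship can separate a pizza from a calzone even when their ingredients match.

```python
from dataclasses import dataclass, field


@dataclass
class Concept:
    name: str
    parents: list = field(default_factory=list)    # "is-a" links to broader concepts
    relations: dict = field(default_factory=dict)  # relationship name -> set of concept names


class Ontology:
    """Hypothetical toy ontology: concepts joined by is-a links and named relationships."""

    def __init__(self):
        self.concepts = {}

    def add(self, name, parents=(), **relations):
        self.concepts[name] = Concept(
            name, list(parents), {rel: set(vals) for rel, vals in relations.items()}
        )

    def ancestors(self, name):
        """Every concept reachable by following is-a links upward."""
        seen, stack = set(), list(self.concepts[name].parents)
        while stack:
            parent = stack.pop()
            if parent not in seen:
                seen.add(parent)
                stack.extend(self.concepts[parent].parents)
        return seen

    def related(self, name, relation):
        """Targets of a relationship, including those inherited from ancestors."""
        values = set(self.concepts[name].relations.get(relation, set()))
        for ancestor in self.ancestors(name):
            values |= self.concepts[ancestor].relations.get(relation, set())
        return values


onto = Ontology()
onto.add("Food")
onto.add("Topping")
for topping in ("Sauce", "Cheese", "Mushroom", "BellPepper"):
    onto.add(topping, parents=["Topping"])

# Illustrative assumption: pizza and calzone share ingredients but differ in shape.
onto.add("Pizza", parents=["Food"], hasBase={"Crust"},
         hasTopping={"Sauce", "Cheese"}, hasShape={"OpenFaced"})
onto.add("Calzone", parents=["Food"], hasBase={"Crust"},
         hasTopping={"Sauce", "Cheese"}, hasShape={"Folded"})
onto.add("VeggiePizza", parents=["Pizza"], hasTopping={"Mushroom", "BellPepper"})

print(sorted(onto.related("VeggiePizza", "hasTopping")))
# ['BellPepper', 'Cheese', 'Mushroom', 'Sauce']
```

In this toy model, `onto.related("Pizza", "hasTopping")` and `onto.related("Calzone", "hasTopping")` return the same set, so only `hasShape` tells the two apart. A strict top-down hierarchy of foods would have no natural place to record that kind of distinction.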

Ontologies allow machines to understand the ways in which objects’ definitions are complex and context dependent. Consider tomatoes, for example. Botanically, a tomato is a fruit. And yet, if you order a veggie pizza, tomato will almost certainly be one of the toppings. Humans understand intuitively that where pizza is concerned, the definition of “tomato” can shift, a fact that a machine might not grasp.

“The difficulty is that a tomato can be considered a fruit or a vegetable, depending on context,” Blackhart says. “The idea of an ontology is to try to capture nuances like these and then draw relationships between concepts.”

Recognizing such context-dependent relationships can help search engines understand a query and provide relevant results. For example, Blackhart says, consider a search query about a “stinky pizza”—that is, a pizza topped with a pungent cheese such as gorgonzola. A simple search engine queried with the terms “stinky pizza” might only return results that explicitly include the phrase “stinky pizza.” By contrast, a search engine that relies on an ontology could understand that stinkiness is a property of certain cheeses, automatically retrieving results that include the word “gorgonzola” instead.
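The contrast between the two search engines can be sketched in a few lines of Python. This is a minimal illustration, not how Terrier/Compendia or any production engine works: the `HAS_PROPERTY` table stands in for an ontology, and the function names and sample documents are hypothetical. Each query term is expanded to include every concept the ontology says carries that term as a property, so “stinky” also matches “gorgonzola.”

```python
import re

# Hypothetical mini-ontology: which concepts carry which properties.
HAS_PROPERTY = {
    "gorgonzola": {"stinky"},
    "limburger": {"stinky"},
    "mozzarella": {"mild"},
}


def tokenize(text):
    """Lowercase word set, ignoring punctuation."""
    return set(re.findall(r"[a-z]+", text.lower()))


def expand_term(term):
    """The term itself plus every concept the ontology says has it as a property."""
    return {term} | {c for c, props in HAS_PROPERTY.items() if term in props}


def phrase_search(docs, query):
    """Naive search: the exact phrase must appear."""
    return [d for d in docs if query.lower() in d.lower()]


def ontology_search(docs, query):
    """Each query term must match the term itself or one of its ontology expansions."""
    groups = [expand_term(t) for t in tokenize(query)]
    return [d for d in docs if all(group & tokenize(d) for group in groups)]


DOCS = [
    "Our stinky pizza special is back this week.",
    "Pear and gorgonzola pizza with walnuts.",
    "Classic margherita pizza with fresh mozzarella.",
]

print(phrase_search(DOCS, "stinky pizza"))    # only the first document
print(ontology_search(DOCS, "stinky pizza"))  # first and second documents
```

The design choice here is query expansion: the documents are left untouched, and the ontology widens what each query term is allowed to match.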

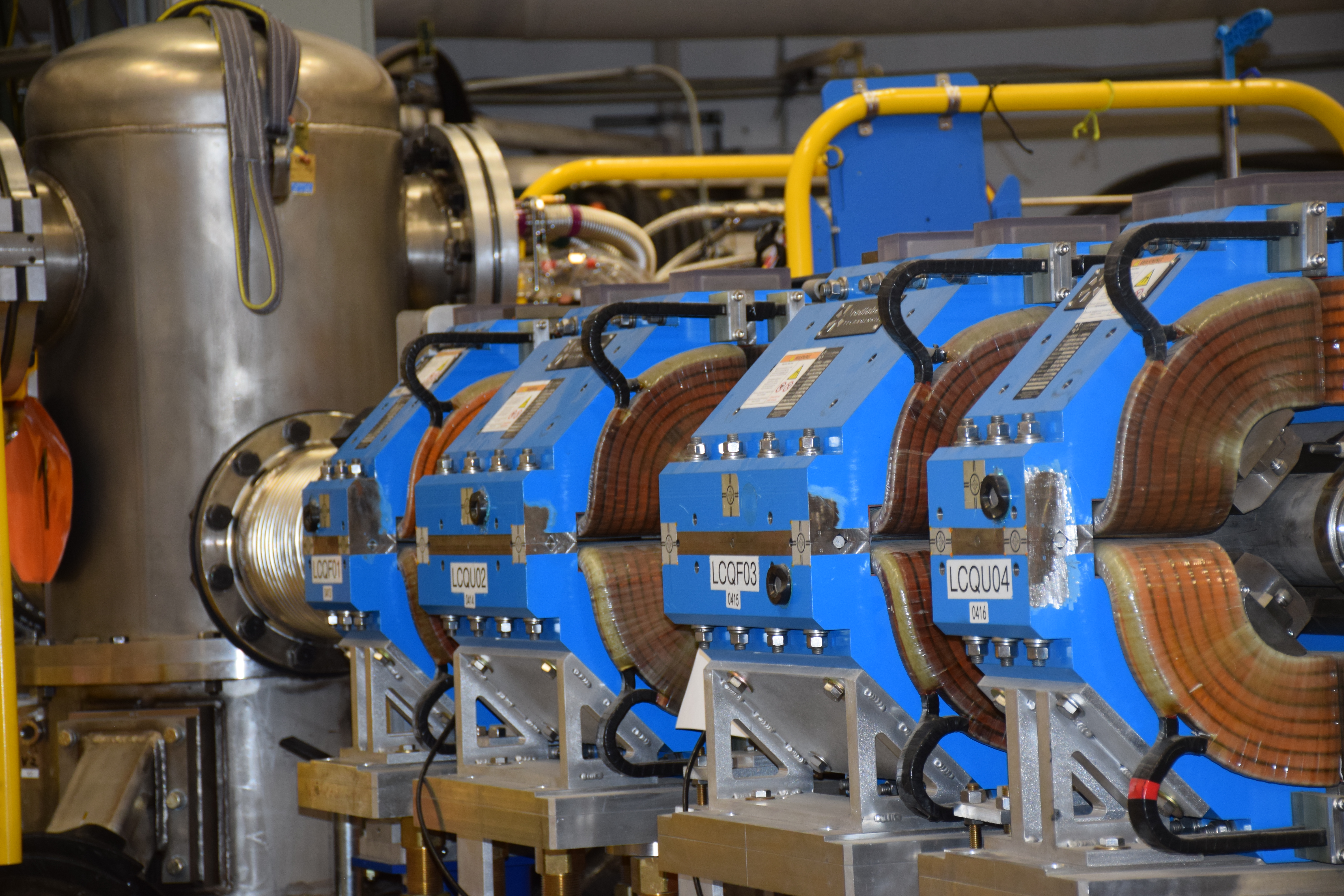

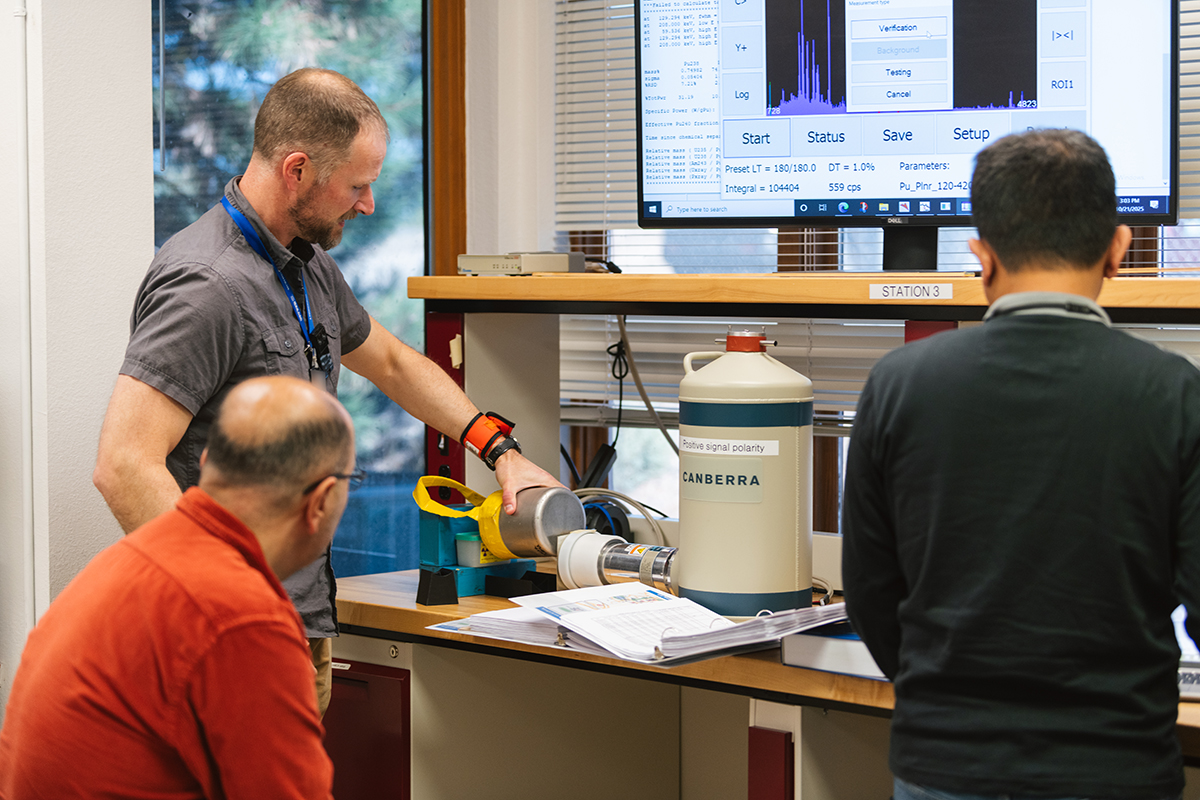

At Los Alamos, Blackhart is working to develop ontologies that capture and reflect knowledge related not to pizza, but to nuclear weapon design. The Laboratory is home to the National Security Research Center (NSRC)—an archive containing tens of millions of documents that track more than eight decades of nuclear weapons research and development, including records both from Los Alamos and the former Rocky Flats Plant in Colorado.

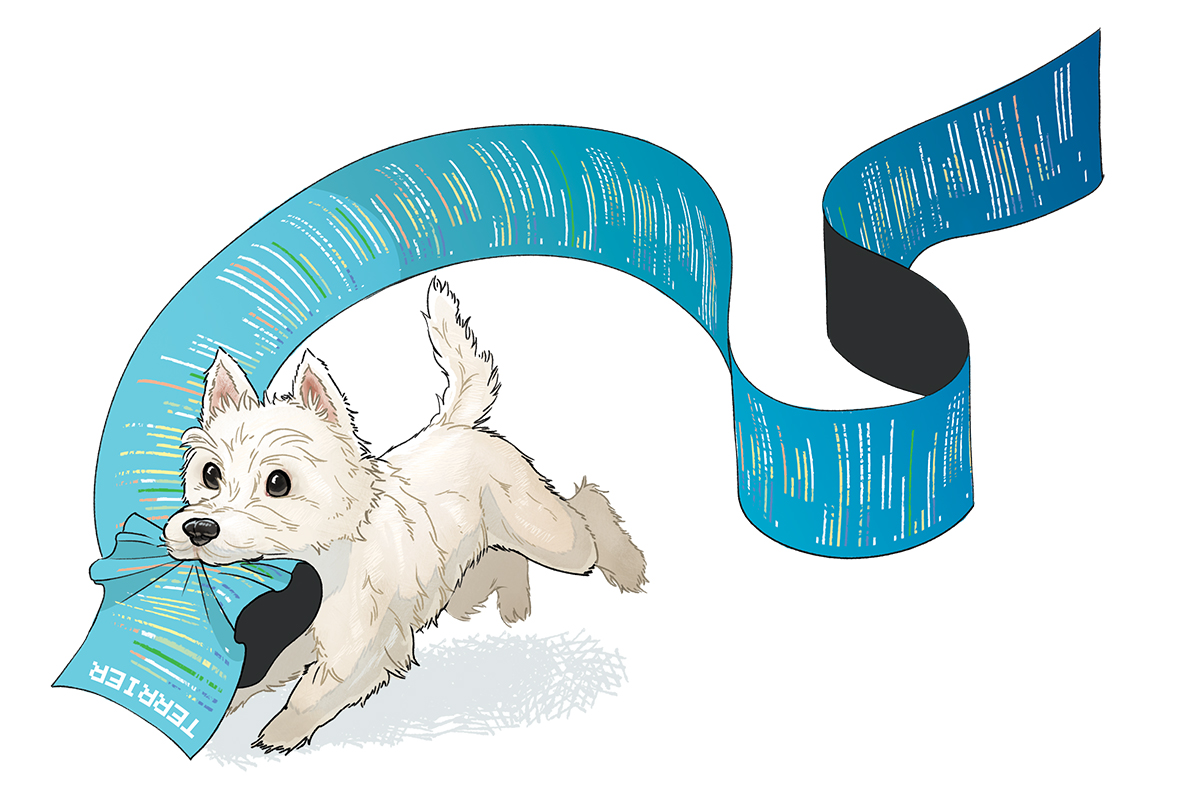

The sheer size of this archive makes accessing information within it difficult. For the better part of a decade, Blackhart and other engineers have been working with the NSRC to develop an ontology that captures the nuances of weapons knowledge, informing an intelligent search engine called Terrier/Compendia that will allow weapons researchers to easily find relevant documents in the NSRC’s archives.

Developing an ontology of weapons knowledge has involved conducting extensive interviews with weapons experts at Los Alamos and using the ontology-editing program Protégé to map out the relationships between concepts. Among other things, the ontology can capture the fact that physicists, engineers, and manufacturers might use different terms to refer to the same concepts, which helps ensure that the search engine retrieves all the documents relevant to a given query. Blackhart expects that Compendia will be available for use on the Laboratory’s classified network in the next several months.

Blackhart has also been exploring the use of ontologies to augment AI systems. A shortcoming of large language models (LLMs) such as ChatGPT is their tendency to “hallucinate” information, which can lead them to give incorrect answers to queries. One way to make LLMs more accurate could be a technique called retrieval-augmented generation. A user could enter a query (“Is a tomato a fruit or a vegetable pizza topping?”), prompting the LLM to automatically retrieve an ontology about pizza against which its output can be evaluated. This could ground the system’s answer in verified knowledge, making it more likely to be accurate.
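The grounding idea can be sketched in Python as two steps: retrieve ontology facts related to the question and place them in the model’s prompt, then check the claims in the model’s answer against those facts. This is a minimal sketch under stated assumptions and not Blackhart’s method. The triples, function names, and naive string-matching retrieval are hypothetical; the call to an actual LLM and the step that extracts claims from its answer are omitted.

```python
# Hypothetical ontology facts as (subject, relation, object) triples.
TRIPLES = [
    ("Tomato", "isBotanically", "Fruit"),
    ("Tomato", "isUsedAs", "VegetableTopping"),
    ("VeggiePizza", "hasTopping", "Tomato"),
    ("Gorgonzola", "hasProperty", "Stinky"),
]


def retrieve(query, triples):
    """Naive retrieval: keep triples whose subject or object is named in the query."""
    q = query.lower()
    return [t for t in triples if t[0].lower() in q or t[2].lower() in q]


def build_prompt(query, facts):
    """Put the retrieved facts in front of the question so the model can ground its answer."""
    context = "\n".join(f"- {s} {r} {o}" for s, r, o in facts)
    return f"Answer using only these facts:\n{context}\n\nQuestion: {query}"


def unsupported_claims(claims, triples):
    """Claims (already extracted as triples) that the ontology does not contain."""
    known = set(triples)
    return [c for c in claims if c not in known]


query = "Is a tomato a fruit or a vegetable pizza topping?"
facts = retrieve(query, TRIPLES)
print(build_prompt(query, facts))

# A model answer whose claims were extracted as triples (extraction step not shown).
claims = [("Tomato", "isBotanically", "Fruit"), ("Tomato", "isBotanically", "Vegetable")]
print(unsupported_claims(claims, TRIPLES))  # flags the "Vegetable" claim
```

Real retrieval-augmented systems usually retrieve with semantic search rather than substring matching, but the shape is the same: the ontology supplies vetted facts before generation and a reference to check against afterward.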

Although Blackhart has only evaluated this approach on smaller AI systems, the results have been promising, and he hopes to evaluate the use of ontologies with LLMs in the future. Such a technique could help keep AI systems honest—and not just about pizza. ★