Not Magic…Quantum

A commercial quantum computer now exists, and it just arrived at Los Alamos.

- Eleanor Hutterer, Editor

A famous physicist once said, “If you think you understand quantum mechanics, you don’t understand quantum mechanics.” That physicist was Richard Feynman—Los Alamos alumnus, wisecracker, and Nobel laureate—describing a mind-bending subfield of physics wherein the rules of classical mechanics seem to vanish in a puff of smoke.

During Feynman’s years at Los Alamos, the fledgling laboratory’s “computers” were mostly women, many the wives of scientists, who sat at desks for eight hours a day, computing by hand the complex calculations required by the Manhattan Project. Shortly thereafter, the top-secret ENIAC (Electronic Numerical Integrator And Computer)—located in Pennsylvania and regarded as the first general-purpose electronic computer—helped post-war Los Alamos scientists to refine nuclear weapons and to explore other weapons technologies. Soon Los Alamos officials recognized the need for on-site leading-edge computing technologies. The first and second MANIAC computers (Mathematical Analyzer, Numerical Integrator, And Computer) were built in-house during the 1950s and 60s. In the 1970s supercomputers came on the scene and the Laboratory was first to purchase Cray, Connection Machine, and IBM supercomputers. At present, the Laboratory is installing its latest Cray supercomputer, a classical-computing beast dubbed Trinity, which once installed will be one of the most advanced computers in the world. But last month the newest addition to the Lab’s family of futuristic computers arrived, and it’s a horse of a different color: a quantum computer with potentially extraordinary capabilities that are just beginning to be explored.

Quantum computers have long been on the horizon as conventional computing technologies have raced toward their physical limits. (Moore’s Law, an observation that the number of transistors that can fit onto a computer chip doubles every two years, is nearing its expiration date as certain features approach the size of atoms.) And to be certain, general-purpose quantum computers remain on the horizon. However, with the acquisition of this highly specialized quantum computer, Los Alamos, in partnership with Lawrence Livermore and Sandia National Laboratories, is helping to blaze the trail into beyond-Moore’s Law computing technology. This new machine could be a game changer for simulation and computing tools that support the Laboratory’s mission of stockpile stewardship without nuclear testing. It may also enable a slew of broader national security and computer science applications. But it will undoubtedly draw a community of top creative thinkers in computational physics, computer science, and numerical methods to Los Alamos—reaffirming the Lab’s reputation as a computing technology pioneer.

Weird science

Albert Einstein famously rejected parts of the theory of quantum mechanics. His skepticism is understandable. The theory, after all, said that a single subatomic particle could occupy multiple places at the same time. A particle could move from one location to another without traversing the space between. And multiple particles that had previously interacted and then been separated by vast distances could somehow “know” what one another were up to. It didn’t seem to align with what scientists thought they knew.

Einstein’s friend and contemporary, Niels Bohr, argued in favor of the theory and embraced its peculiarities, declaring, “Everything we call real is made of things we cannot call real.” Einstein and Bohr publicly hashed it out over the years in a series of collegial debates that delved deep into the philosophy of nature itself. Bohr’s view prevailed and science has since borne it out. Even though Einstein was never fully satisfied by it, quantum mechanics is now generally accepted as the fundamental way of the world.

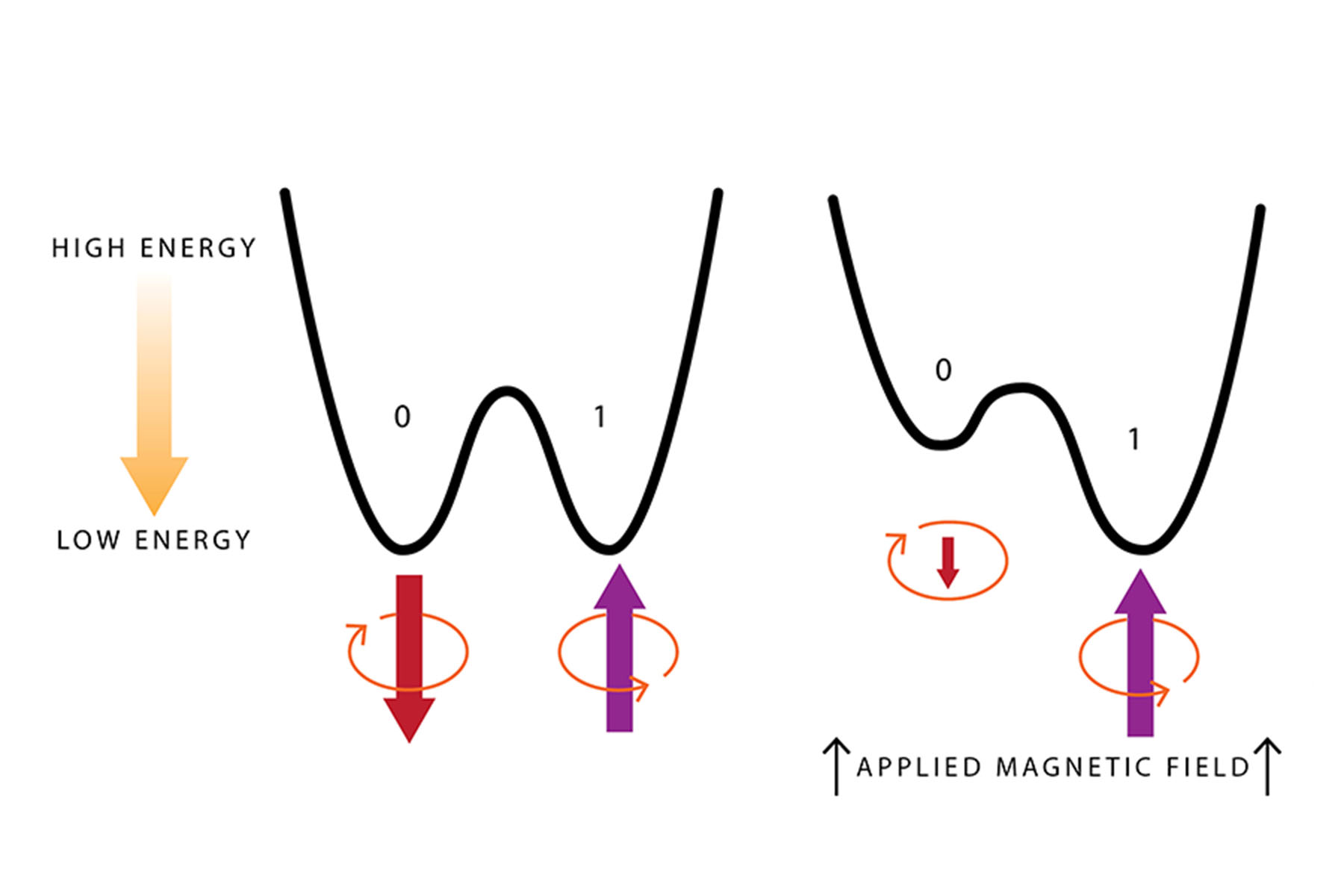

One of the hard-to-get-your-head-around concepts at the heart of quantum mechanics is called superposition. Simplistically, superposition is the idea that something can be in multiple states at the same time. A single electron can have both up and down spin, a single photon can travel both this path and that one, and, conceptually, a luckless cat in a box can be both dead and alive. Until you check, that is. Once the electron’s spin is measured, or the photon is tracked, or the box lid is lifted, the system goes classical and assumes either one state or the other.

All photos courtesy of D-Wave Systems, Inc.

The lifting of the lid causes decoherence—another oddity of the quantum world. For a system to exist in a state of superposition it must not interact with its environment at all, including observers or scientific instruments. The loss of any information from the system to the environment—the lid being lifted and the condition of the cat becoming known—causes the system to decohere. This is why a taxi driver can’t choose the fastest route to the airport by taking them all at once—the car, the road, the driver, the very atoms in the air, everything in the macroscopic world interacts in innumerable ways. Everything we can touch or see or record, by virtue of being touched or seen or recorded, decoheres, and therefore appears classical, not quantum.

Particles that interact enter into a strange relationship with one another. This relationship, known as entanglement, is preserved only as long as the two particles remain sheltered from the rest of the environment; otherwise their entanglement decoheres. For example, if two electrons were entangled in such a way that they must necessarily spin in the same direction, but are initially in a superposition of both possible directions, then the instant one of them assumes a firm spin orientation (due to a measurement, perhaps), the other assumes the same orientation as well. Derisively branded by Einstein as “spooky action at a distance,” this phenomenon holds even if the two electrons have moved thousands of light-years apart.

Superposition, decoherence, and entanglement are head-scratchers to be sure. But that doesn’t make them any less real. If these weird principles of science can be harnessed somehow, then it might become possible to really blow some curtains back.

Wiring the weirdness

A classical computer uses bits as units of information. The term “bit” comes from “binary digit,” which illustrates its two-state nature—it must always be in one of two states, which is denoted by a 1 or a 0. The two states can be just about any binary set—open and closed, on and off, up and down, positive and negative—and the state of the bit describes the state of some part of the physical device.

A quantum computer, on the other hand, relies on quantum bits or “qubits” as units of information. A qubit can also be just about any two-state thing, like the spin of an electron or the polarization of a photon, that avoids decoherence and therefore acts in a quantum way. The power of a quantum computer comes from the superposition of its qubits: they are all in both states at the same time. So, while a system of three classical bits can assume any of eight different configurations (000, 001, 010, 011, 100, 101, 110, 111), it can only try one at a time. But a system of three qubits can try all eight configurations at once. For problems with large numbers of possible answers, a quantum computer would be ideal because, in principle, a qubit processor could evaluate all possibilities at once and present the correct answer in an instant. So a quantum cab driver could indeed try every route to the airport at once to find the fastest one.
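To make the counting concrete, here is a minimal Python sketch (not from the original article; the variable names are illustrative) that lists every configuration of a three-bit classical register. It only shows how the list doubles with each added bit, which is the bookkeeping a classical machine has to work through one entry at a time. It does not simulate a quantum computer.

```python
from itertools import product

n_bits = 3  # illustrative register size

# Every configuration of n_bits classical bits, in counting order.
configurations = ["".join(bits) for bits in product("01", repeat=n_bits)]

print(configurations)       # the eight strings from 000 to 111
print(len(configurations))  # equals 2 ** n_bits

# A classical register holds exactly one of these strings at any moment,
# so checking them all takes 2 ** n_bits separate steps.
for n in (3, 10, 50):
    print(n, "bits ->", 2 ** n, "configurations")
```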

It follows to ask, then: To build a quantum processor, how can individual electrons or photons be completely, utterly isolated from the environment in such a way as to maintain their quantum state but also be wired up to an interface so that macroscopic, human operators can use the computer?

They can’t.

Yet.

That’s what quantum computer developers are working on. The current state of the art for general-purpose quantum computers is fewer than 10 qubits, housed in isolation and not wired to an interface, in various laboratories around the world. And that in itself is a pretty big deal.

About 500 qubits can test, simultaneously, more possibilities than there are atoms in the visible universe. For a very specific kind of quantum computer, called a quantum annealing computer, the current state of the art is over 1000 qubits, housed in an extremely cold chamber, inside a big black box. And this is what recently rolled off the truck at Los Alamos.
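The comparison with the number of atoms can be checked with a few lines of arithmetic. The sketch below assumes the commonly quoted order-of-magnitude estimate of about 10^80 atoms in the visible universe; that figure is an assumption brought in for illustration and does not come from the article.

```python
import math

qubits = 500
configurations = 2 ** qubits  # each qubit doubles the count

# Assumption: ~10**80 atoms in the visible universe, a commonly quoted
# order-of-magnitude estimate that does not come from the article.
atoms_estimate = 10 ** 80

print(f"2**{qubits} is about 10**{math.log10(configurations):.1f}")
print("More configurations than atoms:", configurations > atoms_estimate)

# Smallest register whose configuration count passes the estimate.
threshold = next(n for n in range(1, qubits + 1) if 2 ** n > atoms_estimate)
print("Threshold:", threshold, "qubits")
```

Under that assumption the crossover comes well before 500 qubits, so the article's round number is, if anything, conservative.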

Qubits and pieces

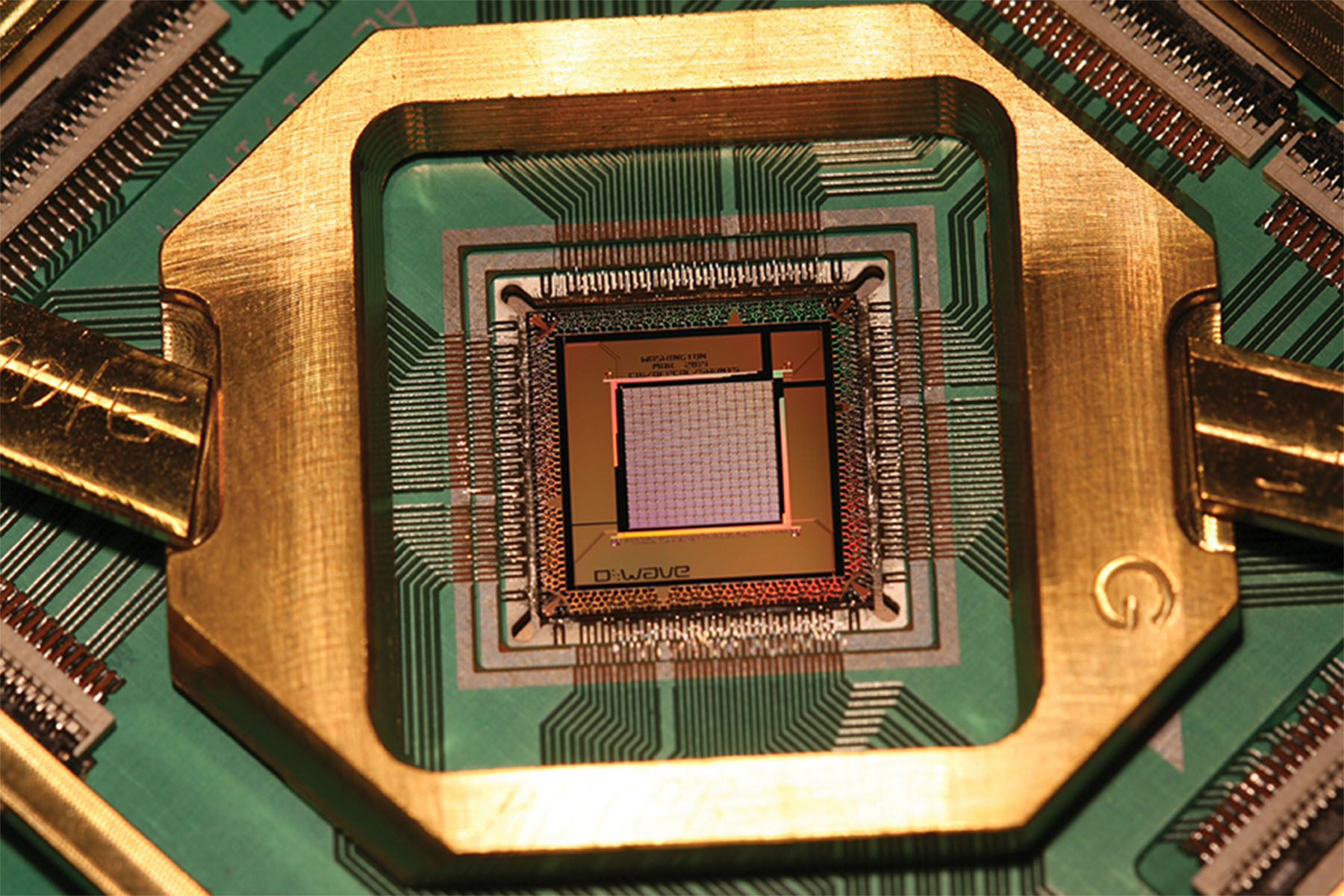

The computer is made by the Canadian company D-Wave Systems and is its third-generation quantum annealer, called the 2X. How did D-Wave build a qubit-based processor when qubits are so hard to handle? Well, it had two good tricks up its sleeve. The first was focusing only on annealing, rather than trying to build a general-purpose processor. The second trick involved the qubits themselves.

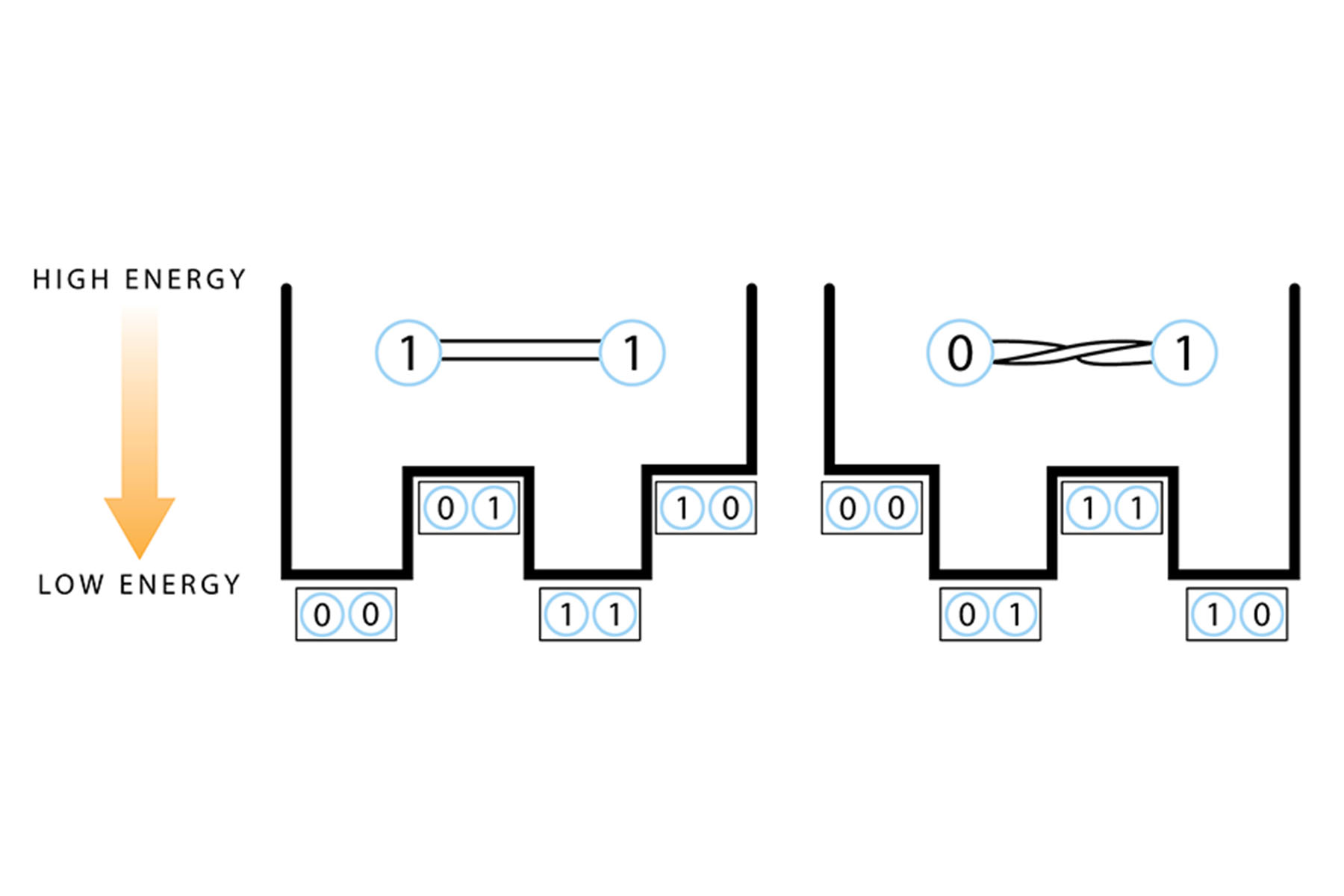

Annealing is a term that refers to something going from a highly disordered state to a less disordered state. In metallurgy, annealing is used to make metal stronger through high heating followed by slow cooling. The atoms in the metal gain energy from the heat, and then as they cool, they settle into a less disordered, very low-energy configuration. In computing, annealing refers to solving a problem by finding the minimum energy state. What a quantum annealing computer is good for is optimization problems, which aim to find the best, or least-disordered, lowest-energy solution from a large range of possible solutions. A useful example is the traveling salesman problem: If a traveling salesman must visit all the cities in his territory and wind up back where he started, what is the best route and method of transportation to minimize time and cost? Should he travel by car, train, plane, or bus? Should he travel in a zigzag, radial, or random pattern? Is there any road or airport construction to consider? And what about fuel prices? Optimization problems are among the kinds of problems that conventional computers struggle with. So D-Wave chose to focus on building a processor that specializes in them.
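A small sketch shows why the traveling salesman problem strains conventional computers. The Python below tries every route through five made-up cities and keeps the shortest. The coordinates are hypothetical, and the "cost" is straight-line distance only, ignoring the transport modes and fuel prices mentioned above. This is plain brute force on a classical machine, not annealing.

```python
from itertools import permutations
from math import dist, factorial

# Hypothetical city coordinates, in arbitrary units.
cities = {
    "A": (0, 0),
    "B": (0, 3),
    "C": (4, 3),
    "D": (4, 0),
    "E": (2, 5),
}

def tour_length(order):
    """Length of the closed loop that visits the cities in the given order."""
    return sum(
        dist(cities[a], cities[b])
        for a, b in zip(order, order[1:] + order[:1])
    )

start, *others = cities  # fix the starting city; try every order of the rest
best = min(
    ((start, *perm) for perm in permutations(others)),
    key=tour_length,
)
print(best, round(tour_length(best), 2))

# The number of orderings to check grows factorially with the city count.
for n in (5, 10, 20):
    print(n, "cities ->", factorial(n - 1), "orderings")
```

Five cities need only 24 orderings, but the count grows so quickly that exhaustive search becomes impractical after a few dozen cities, which is why approximate methods such as annealing are attractive.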

The second thing that D-Wave did differently involved its choice of qubit. Decoherence is one of the biggest hurdles to building a quantum computer—a qubit is a qubit only as long as it doesn’t decohere. And how can you manage decoherence if your qubit is a single photon? It turns out a qubit needn’t be so small. A macroscopic qubit is the key, and rather than a cat in a box it turned out to be a squid in a freezer.

Superconducting quantum interference devices, or SQUIDs, have been used in a wide variety of applications that depend on measuring very small magnetic fluctuations. From portable medical imaging devices to earthquake prediction to gravitational-wave detection, ultrasensitive SQUIDs have been the key to some major scientific breakthroughs, in addition to quantum computing. In the D-Wave 2X, SQUIDs create small magnetic fields that encode all the processor’s 1s and 0s.

A temperature several hundred times lower than the temperature of interstellar space is required for proper function of the SQUIDs. At this temperature, the metal niobium, a superconductor, begins to exhibit distinct quantum mechanical effects on a much larger scale than is ordinarily possible, making it an ideal material for the quantum processor. The SQUIDs in a 2X are long, skinny, niobium wire loops arranged in groups of eight on a grid. At normal temperatures each loop can run an electric current in either a clockwise or counterclockwise direction, which creates either an “up” or “down” magnetic field. But when the temperature plunges to a frigid 10 millikelvins, the SQUIDs become superconducting, and quantum mechanical effects turn on. The electric current runs in both directions simultaneously, and the SQUIDs become qubits.

The annealer reports to a classical computer on the outside of the big black box so the user can see the result. The unique environment surrounding the chip, which requires not just extremely low temperature but also extremely low pressure and magnetic field, is maintained with a series of 16 filters and shields. But underneath all that, the business end of the processor is unexpectedly small—about the size of a thumbnail. And the big black box isn’t actually full of insulation or banks of electronics—it’s mostly empty. It’s only that big so that a person can fit comfortably inside to perform occasional mundane maintenance or processor modification.

Solving an optimization problem with the 2X isn’t remotely like making a spreadsheet on a conventional computer. “You wouldn’t want to use it to balance your checkbook,” explains John Sarrao, the Laboratory’s Associate Director of Theory, Simulation and Computation. “If you need to get an exact answer, then any beyond-Moore’s-Law technology is going to be a poor choice. But if quick and close is good, then D-Wave is the one.” That’s because it doesn’t necessarily give a precise right answer; it gives a very good answer. And with repeated queries, the confidence in that answer grows.

The question has to be framed as an energy minimization problem, so that the answer will exist in the low spots of an energy landscape. Imagine a golf course with hills and dips and occasional holes, and the goal is to get a ball into the hole whose bottom sits closest to the center of the earth. A classical computer has to drop a few balls and hope that one rolls into one of the deeper holes. With the 2X, the balls can explore all the holes at once and can even burrow underground from one hole to a deeper hole, as long as those holes aren’t too far apart (this action is called tunneling and is yet another curiosity of quantum mechanics).
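The "drop a few balls and hope" picture can be sketched in a few lines of Python. The landscape below is a made-up list of heights, and `roll_downhill` is a hypothetical helper that moves a ball to its lower neighbor until it gets stuck. This is a purely classical illustration of local minima; it does not model tunneling or the 2X hardware.

```python
# Hypothetical one-dimensional "golf course": height at each position.
landscape = [5, 3, 4, 6, 2, 1, 2, 7, 4, 0, 3, 8, 6, 5, 6]

def roll_downhill(position):
    """Move to the lower neighbor until no neighbor is lower (a local minimum)."""
    while True:
        neighbors = [
            p for p in (position - 1, position + 1) if 0 <= p < len(landscape)
        ]
        lowest = min(neighbors, key=lambda p: landscape[p])
        if landscape[lowest] >= landscape[position]:
            return position  # stuck: nothing nearby is lower
        position = lowest

# The deepest hole overall, found here by simply inspecting the whole list.
deepest = min(range(len(landscape)), key=lambda p: landscape[p])

# Drop one ball at every position and record where each comes to rest.
resting_places = [roll_downhill(start) for start in range(len(landscape))]
hits = sum(1 for p in resting_places if p == deepest)

print("Holes where balls get stuck:", sorted(set(resting_places)))
print("Balls that reach the deepest hole:", hits, "of", len(landscape))
```

Most balls settle in a shallower hole because they cannot climb the ridge between holes. Tunneling, as described above, is what lets the annealer get past such ridges.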

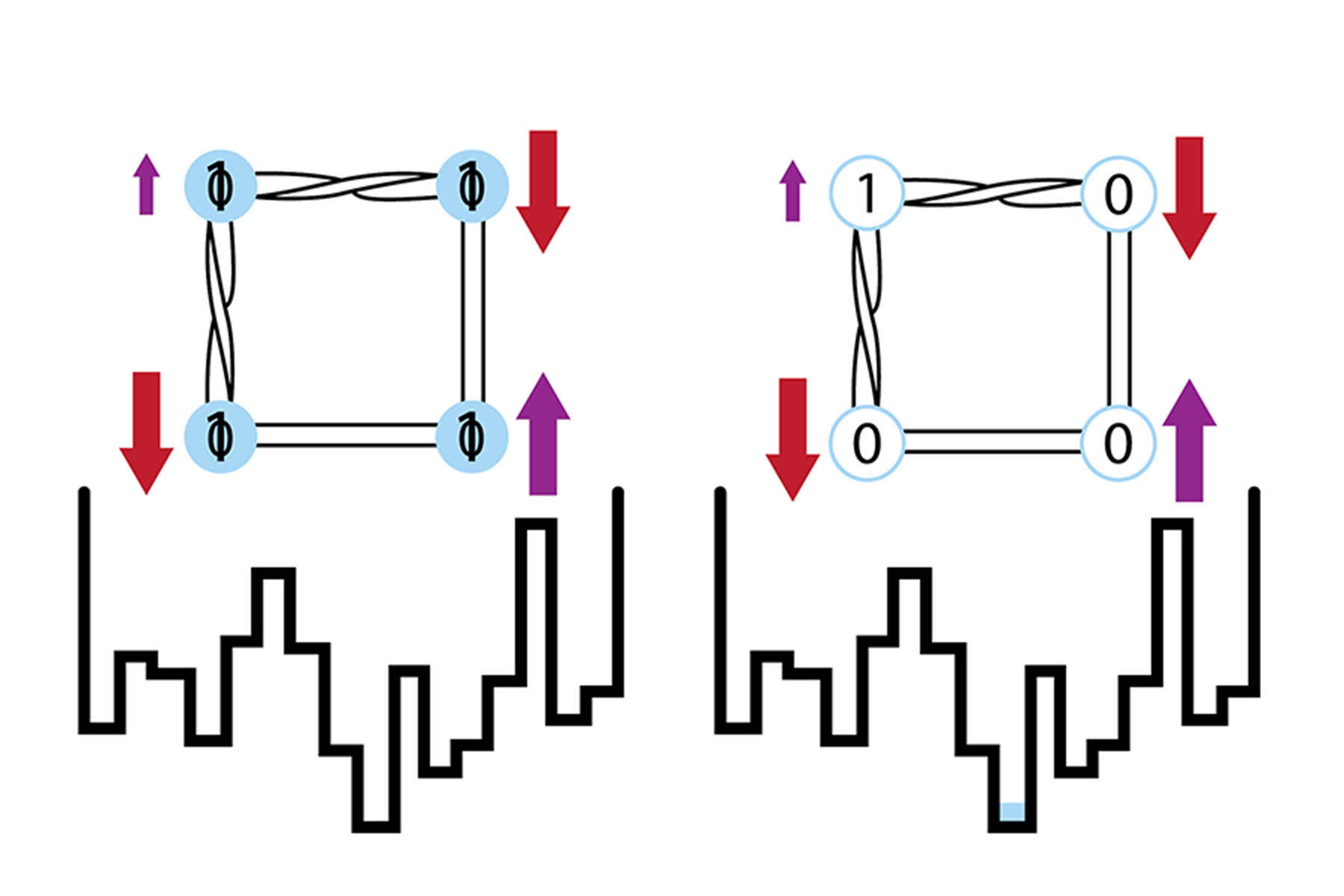

Each question demands its own custom golf course, which the scientist using the machine must construct through biasing and entangling the qubits. This is basically how the quantum computer is programmed. Biases are achieved with magnetic fields applied to individual qubits, and entanglement is done with devices called couplers, which are superconducting loops. The couplers work by lowering the energy of the preferred state in comparison to the alternative, increasing the likelihood that the qubit will take the preferred state. The scientist chooses a whole set of “same” and “opposite” couplings between the qubits to build a unique energy landscape, for which the annealing process finds the lowest energy required to form those relationships. The more complicated the landscape, the more likely quantum annealing is to find a more accurate answer than conventional optimization would provide.
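Biases and couplings of this kind are commonly written as an Ising-style energy function, in which each qubit is a spin of +1 or -1. The sketch below builds a tiny three-qubit landscape and finds its lowest-energy configuration by brute force. The numbers, the sign convention (negative coupling means "same", positive means "opposite"), and the names `biases` and `couplings` are assumptions for illustration, and the exhaustive search merely stands in for the annealer.

```python
from itertools import product

# Hypothetical problem on three qubits. Spins are +1 ("up") or -1 ("down").
biases = {0: -1.0, 1: 0.0, 2: 0.0}        # a negative bias favors +1
couplings = {(0, 1): -1.0, (1, 2): +1.0}  # negative: "same"; positive: "opposite"

def energy(spins):
    """Ising-style energy: bias terms plus pairwise coupling terms."""
    bias_term = sum(h * spins[i] for i, h in biases.items())
    coupling_term = sum(
        j * spins[a] * spins[b] for (a, b), j in couplings.items()
    )
    return bias_term + coupling_term

# Brute force over all 2**3 spin assignments stands in for the annealer here.
ranked = sorted(product((+1, -1), repeat=3), key=energy)
for spins in ranked:
    print(spins, energy(spins))

ground_state = ranked[0]
print("Lowest-energy configuration:", ground_state)
```

In this toy landscape the winning configuration satisfies all three preferences at once: qubit 0 is up, qubit 1 matches it, and qubit 2 is opposite to qubit 1. Real problems have competing preferences that cannot all be satisfied, which is what makes the search hard.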

The control circuitry for creating the energy landscape (standardizing the qubits, creating interactions between qubits, turning quantum effects on and off, and reading out the final answer) takes up most of the processor chip and most of the user’s time. While the computation itself is lightning quick, setting up the problem takes a lot of time and that’s where initial research will be focused. Right now it takes many hours of planning to run a millisecond experiment, but the more the scientists work with it, the better they’ll get at the planning, and the more use the machine will get.

D-Wave of the future

Los Alamos and other entities with D-Wave systems in residence (the Los Alamos machine is the third D-Wave machine to be sited outside D-Wave headquarters) aren’t customers so much as they are collaborators. No one really knows everything the machine can do, and the best way to find out is to get it into the hands of a bunch of scientists.

“It’s an investment in learning,” says Mark Anderson of the Weapons Physics Directorate who spearheaded the effort to bring a D-Wave 2X to Los Alamos. “We are building a community of scientists who want to explore the capabilities and applications of quantum-annealing technology.” There is already a short queue of users at Los Alamos, who have been running experiments on a 2X machine at D-Wave headquarters in Canada. But now that there is one here, the line, and the excitement, is growing.

In 1982, in the keynote lecture of a theoretical physics conference, Feynman spoke about the possibilities of quantum computing. “Nature isn’t classical, dammit,” he said in his concluding remarks, “and if you want to make a simulation of nature, you’d better make it quantum mechanical, and by golly it’s a wonderful problem, because it doesn’t look so easy.”

And he was right.

People Also Ask

What is quantum computing?

Quantum computing is an approach to computation based on principles of quantum mechanics such as superposition and entanglement. For certain kinds of problems, quantum computers are expected to find answers that are out of practical reach for classical computers.

What is a qubit?

A qubit, or quantum bit, is the quantum version of a bit, the fundamental unit of information in computing. A bit can represent one of two values, typically 0 or 1. A qubit must be able to exist in a superposition of both states, and it must be contained and manipulated in such a way that uncontrolled interactions with its environment do not cause it to decohere.