Science Highlights, February 4, 2015

Awards and Recognition

Earth and Environmental Sciences

Correlation between climate and wildfires during an extreme drought

Materials Physics and Applications

Mapping multiple quantum transitions in the antiferromagnet CeRhIn5

Materials Science and Technology

A quantification and certification paradigm for additively manufactured materials

Awards and Recognition

Los Alamos simulation project is a finalist in ANSYS Hall of Fame competition

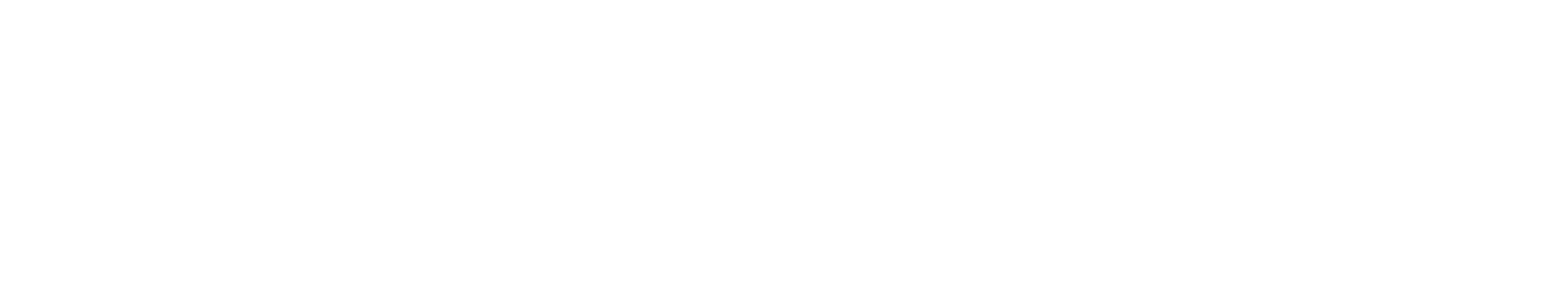

The ANSYS Hall of Fame competition showcases some of the most complex engineering simulation images and animations produced worldwide by users of this commercially available engineering simulation tool. Los Alamos National Laboratory (Mechanical and Thermal Engineering, AET-1) researchers and collaborators were named finalists for work using ANSYS Fluent, the computational fluid dynamics (CFD) module of ANSYS. ANSYS is among the leading industrial software packages for numerical simulation (structural, thermal, etc.) and computational fluid dynamics. This achievement recognizes and brings global exposure to the diverse science and advanced engineering done by the Laboratory and the AET-1 team.

The simulation image came from an RPSEA (Research Partnership to Secure Energy for America) project that examines issues surrounding deep-sea oil drilling. Offshore oil platforms for ultra-deep water drilling are floating structures subject to vortex-induced motion (VIM) from the sea current, which can jeopardize safety. ANSYS Fluent simulations analyzed the motion of the floating structure caused by complex fluid–structure interactions and vortex shedding, leading to an improved process for platform design and novel strategies for reducing VIM.

Energy resources are finite, and because about two-thirds of the Earth is covered by water, exploiting them increasingly means drilling in deeper and more challenging waters. Designing floating structures for ultra-deep water offshore drilling is a challenging task. Drilling starts thousands of feet below the sea surface, with no infrastructure in place. Motion of the platform due to vortex shedding (vortex-induced

Vortex-induced motion is a complex problem involving high-Reynolds-number turbulent flow (transitional and supercritical flow regimes) and fluid-solid interaction. Available experimental data are very limited, especially field measurements (at full scale, the structure is on the order of a football field in size). Statistical analysis of the structure’s motion requires long simulation times. The large length scales, complex physics, and long transient simulation times necessitate substantial computational resources.
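The scale problem can be made concrete with two textbook relations: the Reynolds number Re = UD/ν and the vortex-shedding frequency f = St·U/D. The sketch below is an order-of-magnitude illustration only; the current speed, column width, Strouhal number, and number of cycles are assumed values, not parameters from the RPSEA study.

```python
# Order-of-magnitude flow parameters for a full-scale semi-submersible column.
# ASSUMED illustrative inputs; not taken from the RPSEA project.
CURRENT_SPEED = 1.0      # U, m/s (assumed)
COLUMN_WIDTH = 20.0      # D, m (assumed)
SEAWATER_NU = 1.0e-6     # kinematic viscosity of seawater, m^2/s (approximate)
STROUHAL = 0.2           # St, a typical bluff-body value (assumed)


def reynolds_number(u: float, d: float, nu: float = SEAWATER_NU) -> float:
    """Re = U * D / nu."""
    return u * d / nu


def shedding_period(u: float, d: float, st: float = STROUHAL) -> float:
    """Vortex-shedding period T = 1 / f, with f = St * U / D."""
    return d / (st * u)


re = reynolds_number(CURRENT_SPEED, COLUMN_WIDTH)
period = shedding_period(CURRENT_SPEED, COLUMN_WIDTH)
cycles = 100  # shedding cycles wanted for motion statistics (assumed)
print(f"Re ~ {re:.1e}")
print(f"shedding period ~ {period:.0f} s")
print(f"{cycles} cycles ~ {cycles * period / 3600:.1f} h of simulated time")
```

Under these assumptions Re is of order 10^7 and each shedding cycle lasts on the order of 100 s, so gathering motion statistics over many cycles means hours of simulated physical time on a fine mesh, consistent with the article’s point about long transient runs.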

In the vortex-induced motion study, the researchers were primarily interested in the motion of the floating structure resulting from complex fluid-structure interaction and vortex shedding due to sea current. The key design parameters are the amplitudes and frequencies of the characteristic body motions, in this case cross-flow translation (sway) and rotation about the vertical z-axis (yaw). The team performed a comprehensive parametric sensitivity study in which they tested different turbulence models [Unsteady Reynolds-Averaged Navier-Stokes (URANS) and Improved Delayed Detached Eddy Simulation (IDDES)] and meshing schemes (y+ sensitivity, flow separation, and resolution of the vortices in the near wake). The researchers validated the computational fluid dynamics results against extensive testing in a tow-tank facility.
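Because the design parameters are the amplitude and frequency of sway and yaw, post-processing typically reduces a simulated or measured motion time series to those two numbers. A minimal sketch using NumPy’s FFT follows; it assumes uniformly sampled data and picks the single strongest spectral peak, which is a simplification (real VIM records can be broadband and are often summarized with statistical amplitudes instead). The function names, the synthetic signal, and the 20 m column width used for the A/D ratio are hypothetical, not part of the tools used in the study.

```python
import numpy as np


def dominant_motion(t, x):
    """Estimate (frequency in Hz, amplitude) of the strongest periodic component of x(t).

    Assumes uniform sampling. The amplitude comes from the single-sided FFT
    peak (scaled by 2/N), which is exact only when the record holds an
    integer number of cycles; otherwise it is biased low.
    """
    t = np.asarray(t, dtype=float)
    x = np.asarray(x, dtype=float)
    x = x - x.mean()                                 # drop the mean offset (e.g. static drift)
    n = x.size
    dt = t[1] - t[0]
    spectrum = np.fft.rfft(x)
    freqs = np.fft.rfftfreq(n, d=dt)
    k = int(np.argmax(np.abs(spectrum[1:]))) + 1     # skip the zero-frequency bin
    return freqs[k], 2.0 * np.abs(spectrum[k]) / n


def nominal_amplitude(x):
    """Statistical amplitude sqrt(2) * std, equal to the amplitude of a pure sinusoid."""
    return np.sqrt(2.0) * np.std(x)


# Synthetic sway record: 3 m at 0.01 Hz (100 s period), sampled every 0.5 s for 1000 s.
t = np.arange(0.0, 1000.0, 0.5)
sway = 3.0 * np.sin(2.0 * np.pi * 0.01 * t)
freq, amp = dominant_motion(t, sway)
column_width = 20.0                                  # assumed D, m, for the A/D ratio
print(f"f = {freq:.3f} Hz, A = {amp:.2f} m, A/D = {amp / column_width:.2f}")
```

The same functions apply unchanged to a yaw record in degrees; normalizing sway amplitude by column width (A/D) is a common way to compare designs of different size.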

The team optimized and validated the computational fluid dynamics approach for practical offshore engineering applications using two industrial CFD solvers, ANSYS Fluent and AcuSolve. The researchers also reached a Laboratory-level milestone: running industrial/commercial numerical packages on LANL’s high-performance computing Turquoise network for the first time. More general, immediate benefits include 1) an improved design-optimization process for large floating structures and 2) possible novel strategies for mitigating vortex-induced motion. Of particular interest are full-scale simulations and the damping effects of the mooring system and risers. The simulations provide insight into the physics of vortex shedding and vortex-induced motion at different length and time scales.

Figure 1. Offshore platform design to minimize the vortex-induced motion (VIM) due to sea current. Vortex shedding as visualized from transient numerical simulations. Images show the submerged portion of different semi-submersible floater designs.

Project team members included postdoc Seung Jun Kim and Principal Investigator Dusan Spernjak (Mechanical and Thermal Engineering, AET-1), Houston Offshore Engineering, Red Wing Engineering, John Halkyard Associates, and MARIN.

The DOE Research Partnership to Secure Energy for America (RPSEA) program funded the work through a CRADA (Cooperative Research and Development Agreement) at Los Alamos. RPSEA is a consortium of U.S. energy research universities, industry, independent research organizations, and state and federal agencies. The program is designed to enable the development of new technologies necessary to produce more secure, abundant and affordable domestic energy supplies from reservoirs in America.

The research supports the Laboratory’s Energy Security mission area and the Information, Science and Technology science pillar through simulations to enable selection of optimized designs for offshore deep water oil drilling stations. LANL’s Institutional Computing provided the computational resources for this research. Technical contact: Dusan Spernjak, AET-1

Bioscience

Phenix software enables 3-D studies of “molecular machines”

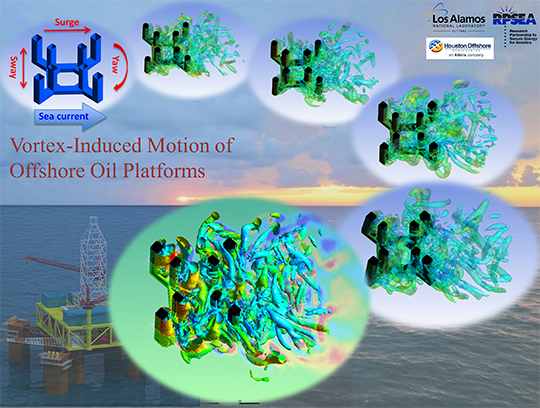

Proteins are the “molecular machines” of life, and knowledge of their three-dimensional (3-D) structures is crucial for understanding how they work. An international team of researchers, including Los Alamos National Laboratory, has improved the Phenix (Python-based Hierarchical ENvironment for Integrated Xtallography) software to enable analysis of X-ray diffraction data that could not be analyzed previously. The team showed that powerful statistical methods could be applied to find metal atoms even if they do not scatter X-rays very differently from the other atoms in the protein. The journal Nature Methods published the work.

The Laboratory was part of the team that originally developed Phenix, a software suite for the automated determination of molecular structures using X-ray crystallography and other methods. The Phenix package includes the SOLVE/RESOLVE software as well as many additional powerful algorithms. More information: http://www.phenix-online.org/

Some molecular machines contain a few metal atoms or other atoms that diffract X-rays differently than the carbon, oxygen, nitrogen, and hydrogen atoms that make up most of the atoms in a protein. The Phenix software finds those metal atoms first, and then uses their locations to find all the other atoms. However, metal atoms must be incorporated into most molecular machines artificially for successful X-ray diffraction and analysis.

Figure 2. Structure of a membrane protein (cysZ) determined with Phenix software using data that could not be analyzed previously.

Los Alamos contributed to this major new development by incorporating powerful statistical methods, developed by members of the Phenix team at Cambridge University, into automated procedures for determining molecular structures. The Los Alamos team showed that even atoms such as sulfur, which are naturally part of almost all proteins, can be readily found and used to generate a 3-D picture of a protein. Because it will now often be possible to obtain a 3-D picture of a protein without artificially incorporating metal atoms into it, many more molecular machines can be studied. Understanding how molecular machines work is key for developing new therapeutics, treating genetic disorders, and developing new ways to make useful materials.
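The “weak anomalous data” in the paper’s title refers to the small amplitude differences between Friedel mates, F(h,k,l) and F(-h,-k,-l), that anomalous scatterers such as sulfur introduce. One simple way to gauge how weak that signal is, is the Bijvoet ratio: the mean anomalous difference divided by the mean amplitude. The sketch below computes it from paired amplitudes; it is a generic diagnostic for illustration, not the statistical methods described in the article, and the input amplitudes are made up.

```python
import numpy as np


def bijvoet_ratio(f_plus, f_minus):
    """<|F(+) - F(-)|> / <F>, where <F> is the mean of the Friedel-pair averages."""
    fp = np.asarray(f_plus, dtype=float)
    fm = np.asarray(f_minus, dtype=float)
    mean_anomalous_diff = np.mean(np.abs(fp - fm))
    mean_amplitude = np.mean(0.5 * (fp + fm))
    return mean_anomalous_diff / mean_amplitude


# Made-up structure-factor amplitudes for three Friedel pairs.
f_plus = [100.0, 200.0, 150.0]
f_minus = [98.0, 203.0, 151.0]
print(f"Bijvoet ratio = {bijvoet_ratio(f_plus, f_minus):.3f}")
```

Ratios of around one percent, as in this toy input, are easily swamped by measurement error, which is why extracting a usable signal from such data depends on careful statistical treatment.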

Reference: “Macromolecular X-ray Structure Determination using Weak, Single-wavelength Anomalous Data,” Nature Methods, published online December 22, 2014; doi: 10.1038/nmeth.3212. Authors include Gábor Bunkóczi, Airlie J. McCoy, and Randy J. Read (Cambridge University); Nathaniel Echols, Ralf W. Grosse-Kunstleve, and Paul D. Adams (Lawrence Berkeley National Laboratory, LBNL); James M. Holton (LBNL and the University of California-San Francisco); and Thomas C. Terwilliger (Biosecurity and Public Health, B-10).

The Phenix software has been used to determine the three-dimensional shapes of over 15,000 different protein machines and has been cited by over 5,000 scientific publications. Researchers from Lawrence Berkeley National Laboratory, Los Alamos National Laboratory (Thomas Terwilliger, B-10), Duke University, and Cambridge University comprise the Phenix development team.

The National Institutes of Health (NIH) funded the LANL work, and the NIH and the Phenix Industrial Consortium sponsor Phenix. The research supports the Laboratory’s Global Security and Energy Security mission areas and the Information, Science, and Technology science pillar through the development of software to analyze protein structure and enable improved insight into the workings of molecular machines. Technical contact: Thomas Terwilliger

Earth and Environmental Sciences

Correlation between climate and wildfires during an extreme drought

Two papers published by Earth System Observations (EES-14) researchers and collaborators describe the conditions leading up to the catastrophic Las Conchas Fire and contemporaneous fires across the southwestern United States in 2011, as well as the overall trend of fire in the American Southwest and its relationship to atmospheric moisture. Former LANL postdoc Park Williams (now at Lamont-Doherty Earth Observatory, Columbia University) led the studies.

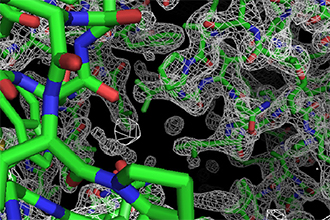

A paper published in the Journal of Applied Meteorology and Climatology examines the extreme 2011 drought in the Southwest. Droughts typically occur when the atmosphere’s demand for moisture (in the form of potential evaporation) exceeds its supply (in the form of precipitation); 2011 was a prime example of the influence of this atmospheric moisture demand on wildfires. Although temperature usually dictates whether the atmosphere’s moisture demand is high or low, the amount of water vapor in the air also matters. In spring and early summer of 2011, exceptionally low atmospheric moisture content combined with fairly warm air to produce a record-high total burned area in the Southwest and individual fires with energy unfamiliar to career firefighters in the region (Figure 3).

Figure 3. Surface climate anomalies in 2011 for (a) log(precipitation), (b) daily maximum temperature, (c) dewpoint, and (d) vapor-pressure deficit (VPD). For each variable, the period of 3-6 months during August 2010 – July 2011 with the strongest anomaly in the Southwest is shown. Maps show spatial distribution as standard deviations from the 1895-2014 mean. Time series show annual values averaged across the Southwest region, with red dots indicating 2011 values. In the maps, red polygons bound the Southwest, black contours represent drought anomalies of 2 standard deviations, and yellow areas indicate locations of 2011 fires.
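Vapor-pressure deficit, the quantity mapped in Figure 3d, is the gap between how much water vapor the air could hold at its temperature and how much it actually holds. A minimal sketch, assuming the widely used Tetens-type approximation for saturation vapor pressure (the form given in FAO Irrigation and Drainage Paper 56) and taking actual vapor pressure as the saturation value at the dewpoint; the papers’ exact VPD calculation is not described here, and the temperatures are illustrative.

```python
import math


def saturation_vapor_pressure_kpa(temp_c: float) -> float:
    """Tetens-type approximation e_s(T) = 0.6108 * exp(17.27 T / (T + 237.3)), T in deg C."""
    return 0.6108 * math.exp(17.27 * temp_c / (temp_c + 237.3))


def vapor_pressure_deficit_kpa(temp_c: float, dewpoint_c: float) -> float:
    """VPD = e_s(T) - e_a, with actual vapor pressure e_a = e_s(dewpoint)."""
    return saturation_vapor_pressure_kpa(temp_c) - saturation_vapor_pressure_kpa(dewpoint_c)


# Same (illustrative) afternoon temperature, two different amounts of moisture in the air.
for dewpoint in (10.0, 0.0):
    vpd = vapor_pressure_deficit_kpa(30.0, dewpoint)
    print(f"T = 30 C, dewpoint = {dewpoint:.0f} C -> VPD = {vpd:.2f} kPa")
```

Holding temperature fixed, lowering the dewpoint from 10 °C to 0 °C raises VPD by about 0.6 kPa: drier air alone, not only warmer air, pushes moisture demand up.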

According to climate models, such as those in the Coupled Model Intercomparison Project Phase 5 (CMIP5), the Southwestern atmosphere should not have become as dry as it did in 2011. The dry air in 2011 most likely had natural causes, driven by an interaction of atmospheric, oceanic, and land-surface conditions. However, 2011 should serve as a warning of possible climate variations in the Southwest: the year provided a glimpse of how wildfires may behave in the future, when warming pushes atmospheric moisture demand in an average year to 2011 levels. Modeling projections suggest that in a warmer future, if another anomalous event occurs in which 2011-type atmospheric dynamics cause extremely low atmospheric water vapor content, its effects would be superimposed on those of background warming. Atmospheric moisture demand would then be unprecedentedly extreme, with potentially catastrophic wildfire consequences if fuel availability is not limiting.

Reference: “Causes and Implications of Extreme Atmospheric Moisture Demand during the Record-Breaking 2011 Wildfire Season in the Southwestern United States,” Journal of Applied Meteorology and Climatology 53, 2671 (2014); doi: 10.1175/JAMC-D-14-0053.1. Authors include A. Park Williams and Richard Seager (Columbia University); Max Berkelhammer and David Noone (University of Colorado – Boulder); Alison Macalady, Thomas Swetnam, and Michael Crimmins (University of Arizona); Anna Trugman (Princeton University); Nikolaus Buenning (University of Southern California); Natalia Hryniw (University of Washington); and Nate McDowell, Claudia Mora, and Thom Rahn (Earth System Observations, EES-14).

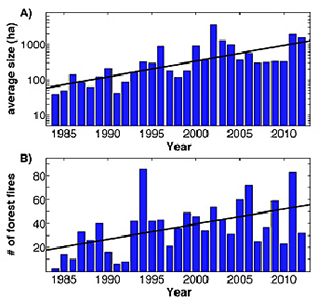

Figure 4. Annual average size (A) and number (B) of forest fires from 1984-2012.

The companion paper, published in the International Journal of Wildland Fire, assesses the correlation between components of the water balance and burned area. The researchers used 30 years (1984-2013) of satellite observations to quantify recent trends and interannual variability in burned area across the Southwest. During that period, 11% (approximately 5,500 square miles) of Southwestern US forest burned in wildfires, and over half of this area has burned since 2006. Of the forest area burned, over half (approximately 3,200 square miles) burned in stand-replacing wildfires (i.e., wildfires that kill most or all of the living upper canopy), as indicated by satellite-derived measurements of burn severity. The annual area of these stand-replacing fires has grown exponentially since 1984, at a rate of more than 16% per year. Growth is fastest in high-elevation forests, at more than 22% per year (Figure 4).
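Exponential rates like these compound quickly. The short calculation below converts the reported annual growth rates into doubling times and a cumulative factor over 1984-2013; it treats the rates as exact and constant, whereas the paper reports them as lower bounds (“more than”) on a fitted trend, so the numbers are only indicative.

```python
import math


def doubling_time_years(annual_rate: float) -> float:
    """Years for a quantity growing at a constant annual_rate (0.16 = 16%) to double."""
    return math.log(2.0) / math.log1p(annual_rate)


def growth_factor(annual_rate: float, years: float) -> float:
    """Cumulative multiplication factor after compounding for the given number of years."""
    return (1.0 + annual_rate) ** years


for label, rate in (("all forests", 0.16), ("high-elevation forests", 0.22)):
    print(f"{label}: doubles every {doubling_time_years(rate):.1f} yr, "
          f"x{growth_factor(rate, 2013 - 1984):.0f} over 1984-2013")
```

At a sustained 16% per year, the annual stand-replacing burned area would double roughly every 4.7 years and grow about 74-fold over 29 years.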

The authors show that drought strongly dictates annual forest-fire area. Drought and the resulting wildfires are in turn strongly influenced by the atmosphere’s moisture demand (vapor-pressure deficit), which is largely governed by spring and summer temperatures. Warming during these seasons has been substantial throughout the Southwest, and as a result, the atmosphere’s moisture demand has grown substantially in recent decades.

The effect of warming on forest fires in the Southwest is extreme because of a combination of exponential relationships. 1) Temperature has an exponential influence on atmospheric moisture demand, meaning that the effect of temperature variations on drought is strongest in places that are already relatively warm, and the effect grows even stronger as warming continues. 2) The relationship between atmospheric moisture demand and burned area is exponential, meaning that even if the atmosphere’s moisture demand grew only steadily, the annual area of forest fires would continue to grow exponentially. Continued warming in the Southwest could cause ever-growing burned-area totals in Southwestern forests until, eventually, forested areas become so fragmented that fuels are limiting and annual burned areas decline.
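Point 1 follows from the shape of the saturation vapor-pressure curve. Using a Tetens-type approximation commonly applied to VPD (an assumption; the papers’ formulation may differ), the sketch below compares how much saturation vapor pressure, and therefore VPD if the air’s actual vapor pressure stays fixed, rises for 1 °C of warming in cool versus warm air.

```python
import math


def saturation_vapor_pressure_kpa(temp_c: float) -> float:
    """Tetens-type approximation for saturation vapor pressure over water, T in deg C."""
    return 0.6108 * math.exp(17.27 * temp_c / (temp_c + 237.3))


def increase_per_degree_kpa(temp_c: float) -> float:
    """Rise in saturation vapor pressure for a 1 deg C warming starting from temp_c.

    If the actual vapor pressure of the air is unchanged, VPD rises by the same amount.
    """
    return saturation_vapor_pressure_kpa(temp_c + 1.0) - saturation_vapor_pressure_kpa(temp_c)


for t in (10.0, 20.0, 30.0):
    print(f"{t:.0f} C -> {t + 1:.0f} C: +{increase_per_degree_kpa(t):.3f} kPa")
```

The same 1 °C of warming adds roughly three times as much moisture demand at 30 °C as at 10 °C, which is why warming has its largest effect in already-warm regions such as the Southwest.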

The findings provide new understanding regarding the nature and strength of the relationships between Southwest wildfire and climate, with implications for seasonal burned area forecasting and future climate-induced wildfire trends. Observed wildfire-climate relationships in the already warm and dry Southwest may provide valuable insight relevant to other regions where the climate is likely to become substantially warmer and drier.

Reference: “Correlations between Components of the Water Balance and Burned Area Reveal New Insights for Predicting Forest Fire Area in the Southwest United States,” International Journal of Wildland Fire 23, (2014); doi: 10.1071/WF14023. Authors include A. Park Williams and Richard Seager (Columbia University); Alison Macalady, Thomas Swetnam, and Michael Crimmins (University of Arizona); David Noone and Max Berkelhammer (University of Colorado); Anna Trugman (Princeton University); Nikolaus Buenning (University of Southern California); Nate McDowell, Claudia Mora, and Thom Rahn (Earth System Observations, EES-14); and Natalia Hryniw (University of Washington).

The Laboratory Directed Research and Development (LDRD) program and the DOE Biological and Environmental Research program funded different aspects of the work. The research supports the Lab’s Energy Security and Global Security mission areas and the Science of Signatures and Information, Science, and Technology science pillars through the ability to measure and simulate the inter-relationships between climate and wildfire. Technical contact: Thom Rahn

LANSCE

LANSCE resumes 120-Hz operations

The Los Alamos Neutron Science Center (LANSCE) resumed 120-Hz operations in early December, providing the neutron flux to meet a Level 2 NNSA milestone and enabling faster completion of a variety of nuclear physics experiments running at the accelerator-based user facility. LANSCE provides the scientific community with intense sources of neutrons for experiments supporting civilian and national security research.

The resumption is the result of LINAC Risk Mitigation activities. Improvements include a new, fully designed radio frequency (RF) power amplifier, new water systems for the drift tube linac, and upgrades to the linear accelerator control systems. Scientists, engineers, and technicians from Accelerator Operations and Technology (AOT) Division designed, tested, and installed the sophisticated systems. The result enabled a return to high-power operations that had ceased in 2006 due to aging high-power radio frequency equipment.

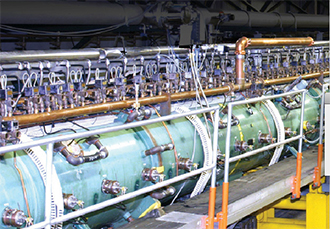

Photo. (Left): The LANSCE linear accelerator (linac).

Photo. The new Diacrode amplifier-based RF systems for the drift tube linac, part of the LINAC Risk Mitigation activities that enabled a return to 120-Hz operations at LANSCE.

The 120-Hz operation also enables the development of new research facilities utilizing the LANSCE LINAC. The improvements are an example of the Laboratory’s LINAC accelerator expertise that could be used to design, build, and operate the MaRIE XFEL, the world’s first very-hard (42-keV) x-ray free-electron laser, which is at the core of the Lab’s proposed Matter-Radiation Interactions in Extremes experimental facility for control of time-dependent material performance.
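For scale, photon energy converts to wavelength through λ = hc/E, with hc ≈ 12.398 keV·Å. The sketch below applies this to the 42-keV figure quoted for MaRIE; the helper name is illustrative.

```python
# h*c expressed in keV * angstrom (from the CODATA values of h, c, and e).
HC_KEV_ANGSTROM = 12.3984198


def photon_wavelength_angstrom(energy_kev: float) -> float:
    """Photon wavelength lambda = h*c / E, in angstroms, for energy in keV."""
    return HC_KEV_ANGSTROM / energy_kev


lam = photon_wavelength_angstrom(42.0)
print(f"42 keV -> {lam:.3f} angstrom ({lam * 100:.1f} pm)")
```

That is roughly 0.3 Å, several times shorter than typical interatomic spacings of about 2 to 3 Å.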

John Lyles and staff (RF Engineering, AOT-RFE) led the high power RF systems effort; Mechanical Design Engineering (AOT-MDE), Instrumentation and Controls (AOT-IC), and Mechanical and Thermal Engineering (AET-1) staff performed water systems and instrumentation and control work.

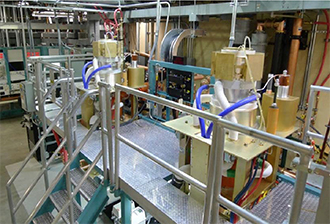

Photos. (Left): Fission Time Projection Chamber. (Right): Chi-Nu Detector Array.

Materials Physics and Applications

Mapping multiple quantum transitions in the antiferromagnet CeRhIn5

Using the unusually high magnetic fields available at the National High Magnetic Field Laboratory (NHMFL) in Tallahassee, Florida, and at the Pulsed Field Facility in Los Alamos, researchers have discovered two distinct classes of zero-temperature transitions called quantum critical points (QCPs). The study represents significant progress toward deriving a universal phase diagram for quantum critical points. Proceedings of the National Academy of Sciences of the United States of America published the research.

This work is important for understanding and developing theories of materials whose electronic and magnetic properties are controlled by quantum fluctuations arising from Heisenberg’s uncertainty principle. One important property that can emerge is unconventional superconductivity, which appears when a magnetic transition in the heavy-fermion metal CeRhIn5 is tuned to zero temperature by applying pressure. CeRhIn5 and related materials display quantum-driven continuous phase transitions at absolute zero temperature, but scientists have not been able to determine whether these phase transitions exhibit universal behavior.

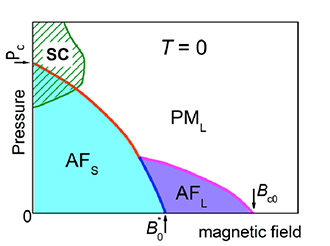

The researchers, who included LANL scientists and international collaborators, used short-pulse 75-tesla fields at Los Alamos and the 45-tesla hybrid magnet in Tallahassee for the work reported in the publication. They measured heat capacity and de Haas-van Alphen (dHvA) oscillations at low temperatures across a field-induced antiferromagnetic quantum-driven continuous phase transition (critical field Bc0 ≈ 50 T) in the heavy-fermion metal CeRhIn5. The team detected a sharp, magnetic-field-induced change in the Fermi surface at B0* ≈ 30 T, well inside the antiferromagnetic phase. Such a sharp change is not expected at the usual type of quantum-driven continuous phase transition. This is the first observation of a sharp Fermi-surface reconstruction while a strong magnetic field is applied to suppress an antiferromagnetic transition to zero temperature.

Figure 5. Zero-temperature pressure-magnetic field phase diagram. Experimentally determined quantum critical points Pc, B0* and Bc0 are compared to a multi-parameter theoretical phase diagram illustrated by the solid curves. Pc: pressure at which the antiferromagnetic transition reaches zero-temperature in the absence of an applied magnetic field, B0*: critical magnetic field where there is a change at zero-temperature from a small to large Fermi surface inside the antiferromagnetically ordered phase, Bc0: critical magnetic field needed to suppress the antiferromagnetic transition to zero-temperature without applied pressure, SC: superconductivity, AF: antiferromagnetism with small (s) and large (L) Fermi surfaces, PML: magnetically disordered with large Fermi surface.
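De Haas-van Alphen measurements see the Fermi surface through the Onsager relation, F = (ħ/2πe)A, which ties each oscillation frequency F (in tesla, the reciprocal of the oscillation period in 1/B) to an extremal cross-sectional area A of the Fermi surface. A sharp change in the measured frequencies therefore signals a sharp change in Fermi-surface geometry. A minimal sketch of the conversion; the 1,000-T frequency is an illustrative input, not a value reported for CeRhIn5.

```python
import math

E_CHARGE = 1.602176634e-19   # elementary charge, C (exact SI value)
HBAR = 1.054571817e-34       # reduced Planck constant, J*s (CODATA 2018)


def frequency_from_period(delta_inv_b: float) -> float:
    """dHvA frequency F in tesla from the oscillation period in 1/B (units of 1/T)."""
    return 1.0 / delta_inv_b


def extremal_area_m2(freq_tesla: float) -> float:
    """Onsager relation rearranged: A = 2*pi*e*F / hbar, a k-space area in m^-2."""
    return 2.0 * math.pi * E_CHARGE * freq_tesla / HBAR


F = frequency_from_period(1.0e-3)   # oscillations repeating every 0.001 T^-1 (illustrative)
A = extremal_area_m2(F)
print(f"F = {F:.0f} T -> A = {A:.3e} m^-2 = {A * 1e-20:.4f} angstrom^-2")
```

Because F is proportional to A, tracking dHvA frequencies as the field crosses B0* is a direct way to follow how the Fermi surface changes.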

Comparisons with electronic-structure calculations and with properties of the closely related material CeCoIn5 suggest that the Fermi-surface change at B0* is associated with a transition of the Ce-4f electrons in CeRhIn5 from localized to itinerant. Taken together with earlier pressure experiments, the results demonstrate that at least two distinct classes of quantum-driven continuous phase transitions are observable in CeRhIn5: one reached by applying pressure and others reached by applying a magnetic field. This is consistent with the theoretically predicted universal phase diagram, in which the two classes of quantum-driven continuous phase transitions are connected. That connection remains to be tested in future experiments that combine high pressures and very high magnetic fields at the National High Magnetic Field Laboratory. These experiments demonstrate that direct measurements of the Fermi surface can distinguish between theoretically proposed models of quantum criticality, and they point toward a universal description of quantum phase transitions.

Reference: “Fermi surface reconstruction and multiple quantum phase transitions in the antiferromagnet CeRhIn5,” Proceedings of the National Academy of Sciences of the United States of America 112, 638 (2015); doi: 10.1073/pnas.1413932112. Los Alamos authors are Yoshitaka Kohama, Eric Bauer, John Singleton, Marcelo Jaime, and Joe Thompson (Condensed Matter and Magnet Science, MPA-CMMS); and Jianxin Zhu (Physics of Condensed Matter and Complex Systems, T-4). The collaboration included researchers from Zhejiang University in China, Sungkyunkwan University in South Korea, Max Planck Institute for Chemical Physics of Solids in Germany, Rice University, the National High Magnetic Field Laboratory at Florida State University, and Los Alamos.

The DOE Office of Science project Complex Electronic Materials and the LANL Laboratory Directed Research and Development (LDRD) program funded different aspects of the research at Los Alamos. The National Science Foundation, State of Florida, and the DOE Basic Energy Sciences program Science at 100 T sponsored the National High Magnetic Field Laboratory. The work supports the Lab’s Energy Security mission area and the Materials for the Future science pillar by pursuing the science required to discover and understand complex and collective forms of matter that exhibit novel properties and respond in new ways to environmental conditions, enabling the creation of materials with innate functionality, such as superconductivity. Technical contact: Joe Thompson

Materials Science and Technology

A quantification and certification paradigm for additively manufactured materials

Additive manufacturing (AM) is the process of joining materials to make objects from 3-D model data, usually layer upon layer, as opposed to conventional subtractive manufacturing methods. Additive manufacturing is an agile model for designing, producing, and implementing the process-aware materials of the future. However, no “ASTM-type” certified processes or material specifications yet exist for additively manufactured materials, so the certification and qualification paradigm for additive manufacturing needs to evolve. Even small changes in variables such as starting feed material (powder or wire), component geometry, build process, and post-build thermo-mechanical processing can complicate the qualification cycle, leading to long implementation times.

In large part, this is because researchers cannot predict and control the processing-structure-property-performance relationships in additively manufactured materials at present. Metallic-component certification requirements have been documented elsewhere for specific materials. The requirements generally involve meeting engineering and physics requirements tied to the functional performance requirements of the engineering component, and process and product qualification. Key microstructural parameters and defects need to be quantified in order to establish minimum performance requirements.

As part of an NNSA complex-wide effort to develop a certification and qualification process for additively manufactured materials, Los Alamos researchers quantified the constitutive behavior of an additively manufactured stainless steel, both as-built and after recrystallization, and compared it with that of a conventionally manufactured annealed, wrought stainless steel.

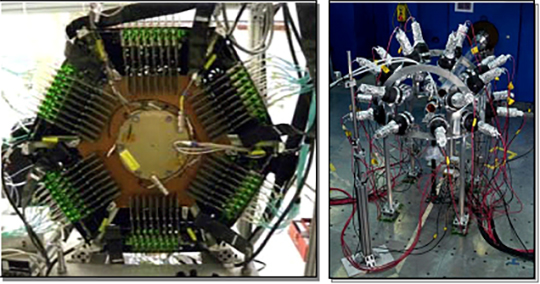

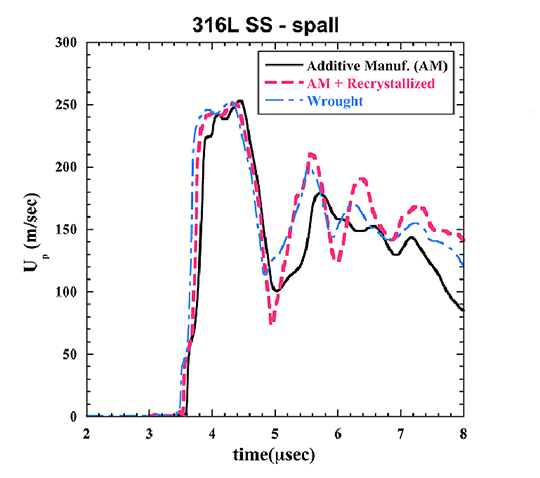

Figure 6. Initial spallation testing indicates significant dynamic ductility.

In this initial study, researchers produced cylinders of 316L stainless steel (SS) using a LENS MR-7 laser additive manufacturing system from Optomec (Albuquerque, NM) equipped with a 1kW Yb-

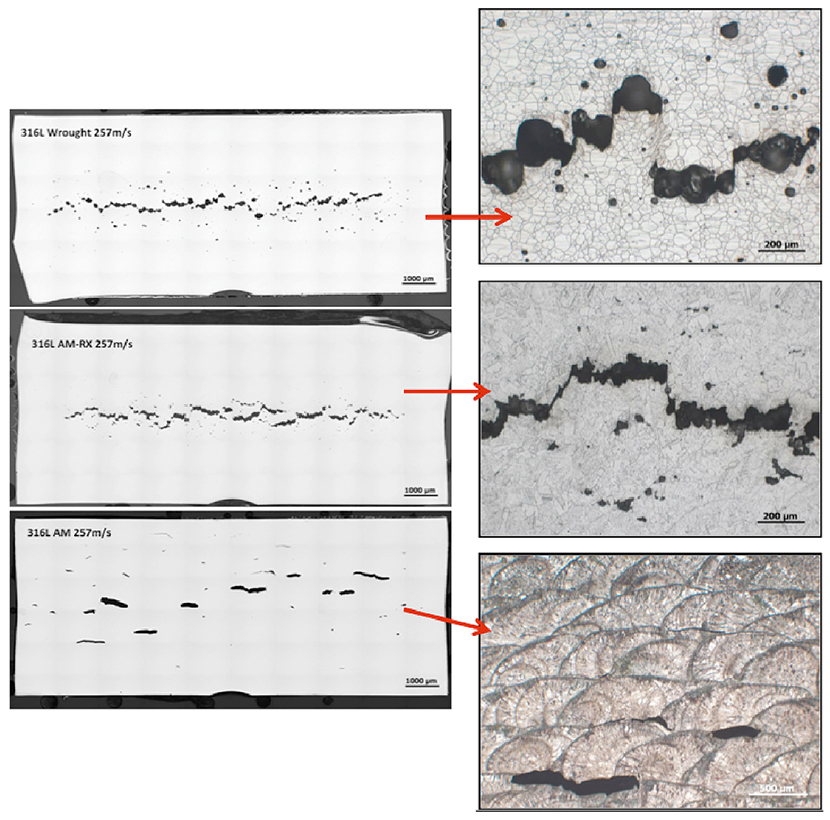

fiber laser. The team characterized the microstructure of the additively manufactured-316L SS in both the as-built condition and following heat-treatments to obtain full recrystallization. The scientists measured constitutive behavior as a function of strain rate and temperature and compared it with that of nominal annealed wrought 316L SS plate. They probed the dynamic damage evolution and failure response of all three materials using flyer-plate impact driven spallation experiments at two peak stress levels, 4.3 and 6.2 GPa, to examine incipient and full spallation response.
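Peak shock stresses such as 4.3 and 6.2 GPa are set by the flyer-plate impact velocity. A common back-of-the-envelope estimate assumes a linear shock-velocity/particle-velocity Hugoniot, U_s = c0 + s·u_p, so the stress is σ = ρ0(c0 + s·u_p)u_p; for a flyer of the same material as the target, the particle velocity is half the impact velocity. The sketch below inverts that relation. The Hugoniot constants are approximate literature values for 304 stainless steel used as a stand-in for 316L, and the symmetric-impact assumption is ours; the article does not give flyer materials or velocities.

```python
import math

# Approximate linear Hugoniot (Us = C0 + S*up) for 304 stainless steel,
# ASSUMED here as a stand-in for 316L; not values from the LANL study.
RHO0 = 7900.0   # initial density, kg/m^3
C0 = 4570.0     # Hugoniot intercept (~bulk sound speed), m/s
S = 1.49        # Hugoniot slope, dimensionless


def particle_velocity(stress_pa: float, rho0: float = RHO0, c0: float = C0, s: float = S) -> float:
    """Solve stress = rho0 * (c0 + s*up) * up for the positive root up (m/s).

    Written as 2*stress / (b + sqrt(b^2 + 4*a*stress)) to avoid cancellation.
    """
    a = rho0 * s
    b = rho0 * c0
    return 2.0 * stress_pa / (b + math.sqrt(b * b + 4.0 * a * stress_pa))


def symmetric_impact_velocity(stress_pa: float) -> float:
    """Flyer velocity if flyer and target are the same material (up = v_impact / 2)."""
    return 2.0 * particle_velocity(stress_pa)


for gpa in (4.3, 6.2):
    up = particle_velocity(gpa * 1e9)
    v = symmetric_impact_velocity(gpa * 1e9)
    print(f"{gpa} GPa: particle velocity ~ {up:.0f} m/s, impact velocity ~ {v:.0f} m/s")
```

With these assumptions, the two stress levels correspond to impact velocities of roughly 230 and 330 m/s.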

The spall strength of wrought 316L SS did not vary between the two peak shock stresses studied, whereas the spall strengths of AM-316L SS, both as-built and after recrystallization, decreased with increasing peak shock stress. Researchers are characterizing the damage evolution as a function of microstructure and peak shock stress using optical metallography, electron backscatter diffraction, and scanning electron microscopy. The experimental results demonstrate that the macroscopic spall strength of the additively manufactured 316L SS is quite similar to that of wrought 316L SS. However, the details of the damage evolution, which are controlled by the complex microstructure of the additively manufactured material, are substantially different.
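Spall strength in plate-impact tests is often inferred from the rear free-surface velocity history: the drop (“pullback”) Δu_fs from the peak velocity to the first minimum is converted to a tensile stress with the acoustic approximation σ_spall ≈ ½ ρ0 c0 Δu_fs. The article does not say which analysis the team used, the pullback values below are hypothetical, and the material constants are approximate values for austenitic stainless steel.

```python
RHO0 = 7900.0     # density, kg/m^3 (approximate, austenitic stainless steel)
C_BULK = 4570.0   # bulk sound speed, m/s (approximate; assumed)


def spall_strength_gpa(pullback_m_per_s: float, rho0: float = RHO0, c0: float = C_BULK) -> float:
    """Acoustic approximation sigma_spall = 0.5 * rho0 * c0 * delta_u_fs, returned in GPa."""
    return 0.5 * rho0 * c0 * pullback_m_per_s / 1.0e9


# Hypothetical pullback velocities for two shots (not data from the study).
for pullback in (140.0, 160.0):
    print(f"pullback {pullback:.0f} m/s -> spall strength ~ {spall_strength_gpa(pullback):.2f} GPa")
```

Because the estimate is linear in the pullback, a lower spall strength at the higher peak stress, as reported for the AM material, appears directly as a smaller pullback in the free-surface velocity record.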

Figure 7. The results of flyer-plate impact-driven spallation on (from top) conventionally manufactured annealed and wrought stainless steel, additively manufactured stainless steel that has been recrystallized, and the as-built additively manufactured 316L SS. The optical microscopy results show void formation and damage along solidification boundaries in the as-built additively manufactured steel; classic spherical void nucleation, growth, and shear coalescence in the wrought steel; and reduced void formation and shear in the recrystallized additively manufactured steel.

The results of their mechanical behavior and spallation testing reinforce the concept that additive manufacturing will force a shift from “material” qualification (ASTM) to science-based qualification and certification. Researchers presented the initial results of the spallation investigation on 316L-SS during the complex-wide JOWOG (Joint Working Group) on additive manufacturing held at Lawrence Livermore National Laboratory in October.

The work is part of the qualification and certification research thrust of a DOE additive manufacturing initiative that includes Los Alamos National Laboratory, Sandia National Laboratories, Lawrence Livermore National Laboratory, Kansas City Plant, and Savannah River Site. Using materials additively manufactured at these sites, Los Alamos is conducting further fundamental dynamic spall tests and characterizing the resulting structure/property relations. Los Alamos participants included G.T. (Rusty) Gray III (Materials Science in Radiation and Dynamics Extremes, MST-8) who serves as principal investigator for the complex-wide program and the LANL activities, John Carpenter and Thomas Lienert (Metallurgy, MST-6), and Veronica Livescu, Carl Trujillo, Shuh-Rong Chen, Carl Cady, Saryu Fensin, and Danial Martinez (MST-8).

Science Campaign 2 (LANL Program Manager Russell Olson, acting) and the Joint Munitions Program (LANL Program Manager Tom Mason) funded the research. The work supports the Laboratory’s Nuclear Deterrence mission area and Materials for the Future science pillar through the development of additive manufacturing methods to produce designed materials with desired structure/property relations. Future facilities, such as MaRIE (Matter-Radiation Interactions in Extremes), could build upon this research linking process-aware materials behavior to performance by enabling in-situ quantification of deformation and damage evolution during dynamic loading. This information could be used in the qualification and certification paradigm for additively manufactured materials. Technical contact: Rusty Gray

Physics

First beryllium capsule experiment successfully completed on world’s largest laser

Inertial confinement fusion (ICF) experiments on the National Ignition Facility (NIF) are designed to implode a spherical capsule containing deuterium-tritium (DT) fuel, which compresses and heats the fuel to initiate thermonuclear burn in a hotspot at the center of the capsule. NIF uses laser beams to implode the fuel to cause nuclear fusion reactions for stockpile stewardship and energy production research. Scientists completed the first beryllium capsule experiment on the National Ignition Facility. This event commenced the experimental portion of the Beryllium Ablator Campaign in which Los Alamos National Laboratory’s inertial confinement fusion program has a leading role. This shot was also the first shot on NIF with beryllium in the target. The team believes that the higher ablation velocity, rate, and pressure of beryllium ablators compared with their plastic carbon-based counterparts could improve capsule performance at NIF.

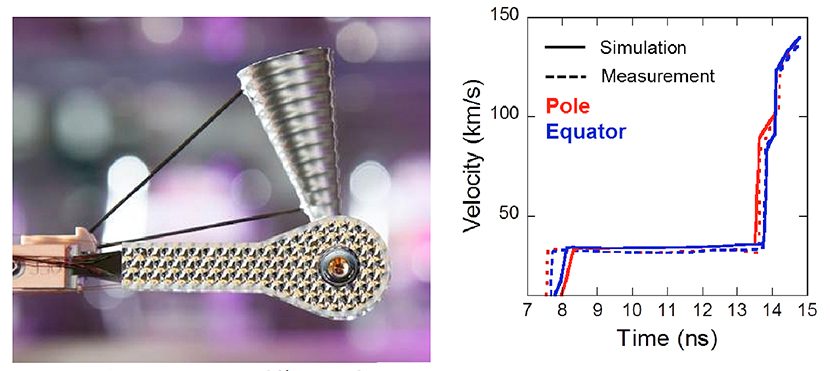

The Beryllium Ablator Campaign aims to compare results from capsules with different material and ablation properties and to locate deficiencies in physics models. Beryllium capsules are the first non-carbon ablators to be examined. The experiment had two objectives: 1) obtaining backscatter data to evaluate the laser damage risk before ramping up the power and energy, and 2) measuring the first shock timing data to benchmark simulations. The experiment used a keyhole target (Figure 8a) designed to measure shock propagation properties with the VISAR diagnostic. This enabled the team to address both experimental goals. The laser backscatter measurements indicate nearly zero backscatter. This result will allow the use of higher laser power and energy for the next shot, which will be a gas-filled beryllium capsule implosion.

Figure 8. a) The keyhole target with beryllium, a capsule used to measure shock speed and timing (Photo courtesy of Jason Laurea), and b) shock breakout and velocities of the first and second shocks from post-shot simulations and experimental data, showing that the simulations can reproduce the experimental data.

The researchers have completed post-shot simulations of the shock breakout and timing and have compared them with the experimental data (Figure 8b). The pre-shot simulations used information from experiments with plastic (CH) ablators. The beryllium team is examining the differences between the calculations and data for the two different ablator materials.

The successful experiment was a truly national effort. Credit goes to the NIF operations team for preparing for beryllium operations; to Los Alamos National Laboratory and Lawrence Livermore National Laboratory (LLNL) program management for supporting LANL’s leading role in this campaign; to LLNL collaborators for support on the experimental design; to LLNL’s target fabrication team for assembling the target; and to General Atomics staff for manufacturing the beryllium capsules and developing the techniques to manufacture them.

The Los Alamos researchers are Andrei Simakov, Douglas Wilson, Austin Yi, Richard Olson, and Natalia Krasheninnikova (Plasma Theory and Applications, XCP-6); John Kline, George Kyrala, and Theodore Perry (Plasma Physics, P-24); and Steve Batha (Physics, P-DO). More information: https://lasers.llnl.gov/news/experimental-highlights/2014/august

NNSA Campaign 10 (Inertial Confinement Fusion, LANL Program Manager Steve Batha) funded the work, which is part of the Lab’s science campaigns that use NIF to execute high energy density experiments in support of stockpile stewardship. The research supports the Lab’s Nuclear Deterrence and Energy Security mission areas, and the Nuclear and Particle Futures science pillar. Technical contact: John Kline

Research Library

Research Library investigates “reference rot” on the web

The emergence of the web has fundamentally affected most aspects of information communication, including scholarly communication. The immediacy of publishing information to the web, and of accessing it, has dramatically increased the speed at which scholarly knowledge is disseminated. However, the transition from a paper-based to a web-based scholarly communication system also poses challenges. Los Alamos researchers and collaborators at the University of Edinburgh examined “reference rot,” the combination of link rot and content drift that affects references to web resources in Science, Technology, and Medicine (STM) articles. The team investigated the extent to which reference rot impairs the ability to revisit the web context surrounding STM articles some time after their publication. The journal PLoS ONE published their findings.

Citation of sources is a fundamental aspect of scholarly discourse. In the past, these references were published articles or books. Today’s web-based scholarly communication includes links to an increasingly vast range of materials. Many of these are resources needed or created in research activity, such as software, datasets, websites, presentations, blogs, and videos, as well as scientific workflows and ontologies. Unlike traditional scholarly articles, these resources often evolve over time. This highly dynamic nature poses a significant challenge: the content at the end of any given http:// link is liable to change. The issue is two-fold: a link may no longer work (link rot), or the referenced content may have changed dramatically from what it was originally (content drift). As a result, what is online at the time of citation is less likely to be there when scholars later wish to look up the citation. The reference rot problem occurs whenever the original version of a linked resource is no longer available.
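The definition above can be written as a small decision rule: the original version of a cited resource is still available if the live link works and its content has not drifted, or if a web archive holds a snapshot taken close to the citation date. The sketch below is illustrative only. The class and function names are hypothetical, and the 14-day "representative snapshot" window is an assumed parameter, not the study's published criterion.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Sequence


@dataclass
class ReferenceCheck:
    """Hypothetical observations about one cited URI."""
    live_responds: bool                 # does the URI still resolve on the live web?
    live_content_unchanged: bool        # is the live content still what was cited?
    referenced_at: datetime             # when the article cited the URI
    snapshot_times: Sequence[datetime]  # datetimes of archived snapshots


def has_representative_snapshot(check: ReferenceCheck, window: timedelta) -> bool:
    """True if some archived snapshot lies within `window` of the citation date."""
    return any(abs(t - check.referenced_at) <= window for t in check.snapshot_times)


def suffers_reference_rot(check: ReferenceCheck,
                          window: timedelta = timedelta(days=14)) -> bool:
    """Assumed rule: rot means neither the live web nor an archive has the original."""
    # The original version is reachable if the live resource is intact...
    if check.live_responds and check.live_content_unchanged:
        return False
    # ...or if an archive holds a snapshot close to the citation date (assumed window).
    return not has_representative_snapshot(check, window)
```

A reference with a dead link but an archived snapshot three days after citation would count as intact under this rule, while a drifted page with no nearby snapshot would count as rotten.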

The team examined a vast collection of articles from three corpora (arXiv, Elsevier, and PubMed Central) spanning the publication years 1997 to 2012. For over one million references to web resources extracted from over 3.5 million articles, the researchers determined whether each HTTP URI is still responsive on the live web and whether web archives contain a snapshot representative of the state of the referenced resource at the time it was referenced. The scientists used the Memento Protocol, which extends HTTP with datetime negotiation, a variant of content negotiation.
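In Memento datetime negotiation (RFC 7089), a client asks a TimeGate for the archived version ("memento") of an original URI closest to a desired datetime by sending an `Accept-Datetime` header in HTTP-date format; the archived snapshot is returned with a `Memento-Datetime` header stating when it was captured. The following is a minimal sketch using only the Python standard library. The TimeGate base URL is a hypothetical placeholder, and the sketch only builds the request and parses the response header rather than performing a network call.

```python
from datetime import datetime, timezone
from email.utils import format_datetime, parsedate_to_datetime
from urllib.request import Request

# Hypothetical TimeGate endpoint; a real client would use an archive's or an
# aggregator's TimeGate URL.
TIMEGATE_BASE = "https://timegate.example.org/timegate/"


def build_timegate_request(original_uri: str, when: datetime) -> Request:
    """Build a request for the memento of `original_uri` closest to `when` (timezone-aware)."""
    # Accept-Datetime uses the HTTP-date (RFC 1123) format and is always expressed in GMT.
    accept_datetime = format_datetime(when.astimezone(timezone.utc), usegmt=True)
    return Request(TIMEGATE_BASE + original_uri,
                   headers={"Accept-Datetime": accept_datetime},
                   method="GET")


def memento_datetime(headers: dict) -> datetime:
    """Parse the Memento-Datetime header that accompanies an archived snapshot."""
    return parsedate_to_datetime(headers["Memento-Datetime"])
```

Passing the request to `urllib.request.urlopen` would follow the TimeGate's redirect to the selected memento; comparing that memento's `Memento-Datetime` with the citation date is one way to judge whether the snapshot is representative.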

The researchers observed that the fraction of articles containing references to web resources is growing steadily over time. They discovered that one out of five STM articles suffers from reference rot, meaning that the web context surrounding such an article can no longer be revisited some time after its publication. When only STM articles that contain references to web resources are considered, this fraction increases to seven out of ten. The researchers suggest that robust solutions to the reference rot problem are required to safeguard the long-term integrity of the web-based scholarly record.
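Because only articles that cite web resources can suffer reference rot, the two rounded figures above also roughly indicate how common such articles were across the three corpora combined. A back-of-the-envelope check, assuming the rounded ratios are exact:

```latex
P(\text{rot}) = P(\text{rot} \mid \text{web refs})\, P(\text{web refs})
\quad\Longrightarrow\quad
P(\text{web refs}) = \frac{P(\text{rot})}{P(\text{rot} \mid \text{web refs})} \approx \frac{0.2}{0.7} \approx 0.29
```

That is, on the order of three in ten articles contained references to web resources; this is an inference from rounded numbers aggregated over 1997 to 2012, not a fraction reported here, and it hides the steady growth over time noted above.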

Photo. (Standing, from left): Los Alamos National Laboratory authors Lyudmila Balakireva, Herbert Van de Sompel, and Harihar Shankar. (Left computer screen): Martin Klein; (right computer screen): Robert Sanderson. (Center computer screen): The graphic shows links from the three STM corpora that were studied (right-hand side) to the six top-level domains they link to (left-hand side). The colored portions show links that are healthy. The grey portions, by far the largest, show links that are affected by reference rot.

The researchers suggest actions to make brittle links on the web more robust. Authors of web pages should: 1) create a snapshot of a resource in a web archive when linking to it, and 2) decorate the link to the resource with the resource’s original URI, the datetime of linking, and the URI of the snapshot in the web archive. There are no tools to automate this yet. Web page users should employ a browser that understands the link decorations that authors put in place. The Chrome browser, equipped with the Memento extension for Chrome, is currently the only tool that supports navigating decorated links (https://chrome.google.com/webstore/detail/memento-time-travel/jgbfpjledahoajcppakbgilmojkaghgm?hl=en&gl=US).
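Step 2 above amounts to attaching three pieces of information to an ordinary link. A minimal sketch of what such a decorated HTML link might look like follows. The `data-*` attribute names are assumptions (they resemble a later convention, but the text above does not specify any syntax), and the function name and example archive URL are hypothetical.

```python
from datetime import date
from html import escape


def decorate_link(original_uri: str, linked_on: date, snapshot_uri: str, text: str) -> str:
    """Return an HTML anchor carrying the original URI, linking date, and snapshot URI."""
    # href keeps pointing at the live resource; the data-* attributes (assumed names)
    # record the datetime of linking and the archived snapshot for later fallback.
    attrs = {
        "href": original_uri,
        "data-originalurl": original_uri,
        "data-versiondate": linked_on.isoformat(),
        "data-versionurl": snapshot_uri,
    }
    rendered = " ".join(f'{name}="{escape(value, quote=True)}"' for name, value in attrs.items())
    return f"<a {rendered}>{escape(text)}</a>"
```

For example, `decorate_link("http://example.com/data", date(2014, 12, 26), "https://archive.example.org/20141226/http://example.com/data", "dataset")` yields a normal link to the live page that a Memento-aware browser could redirect to the recorded snapshot, or to the archive copy closest to the recorded date, if the live page has rotted.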

Reference: “Scholarly Context Not Found: One in Five Articles Suffers from Reference Rot,” PLoS ONE 9(12): e115253 (2014); doi:10.1371/journal.pone.0115253. Authors include Martin Klein, Herbert Van de Sompel, Robert Sanderson, Harihar Shankar, and Lyudmila Balakireva (Research Library, SRO-RL); Ke Zhou and Richard Tobin (University of Edinburgh).

The two-year Hiberlink project, funded by the Andrew W. Mellon Foundation, sponsored the work. Hiberlink investigates how web links in online scientific and other academic articles fail to lead to the resources that were originally referenced, assesses the extent of reference rot, and explores solutions to mitigate the problem. The project is a collaboration between the University of Edinburgh and Los Alamos National Laboratory. The Hiberlink project aims to identify practical solutions to the reference rot problem, and to develop approaches that can be integrated easily in the publication process. The project works with academic publishers and other web-based publication venues to ensure more effective preservation of web-based resources in order to increase the prospect of continued access for future generations of researchers, students, and their teachers.

This research would have been impossible without the Memento “Time Travel for the Web” protocol (RFC 7089) and infrastructure that the LANL team and colleagues at Old Dominion University devised, and without the adoption of that protocol by web archives worldwide. The Hiberlink project builds directly upon a pilot study from Los Alamos National Laboratory, powered by their Memento Time Travel for the Web technology and available at http://arxiv.org/abs/1105.3459.

The work supports the Laboratory’s mission areas and the Information, Science, and Technology science pillar through the ability to access scholarly knowledge on the web. Technical contact: Herbert Van de Sompel